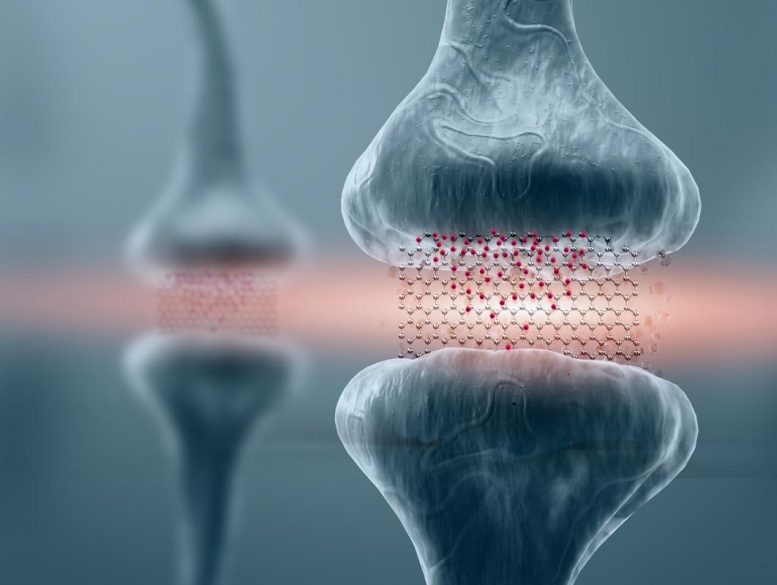

Graphene memristors open doors for biomimetic computing. Credit: Jennifer M. McCann/Penn State

As progress in traditional computing slows, new forms of computing are coming to the forefront. At Penn State, a team of engineers is attempting to pioneer a type of computing that mimics the efficiency of the brain’s neural networks while exploiting the brain’s analog nature.

Modern computing is digital, built on two states: on and off, or one and zero. An analog computer, like the brain, has many possible states. It is the difference between flipping a light switch on or off and turning a dimmer switch to any of a range of lighting levels.

Neuromorphic, or brain-inspired, computing has been studied for more than 40 years, according to Saptarshi Das, the team leader and Penn State assistant professor of engineering science and mechanics. What’s new is that, as the limits of digital computing have been reached, the need for high-speed image processing, for instance in self-driving cars, has grown. The rise of big data, which requires types of pattern recognition for which the brain architecture is particularly well suited, is another driver in the pursuit of neuromorphic computing.

“We have powerful computers, no doubt about that; the problem is you have to store the memory in one place and do the computing somewhere else,” Das said.

Shuttling data from memory to logic and back again takes a lot of energy and slows computing. In addition, this computer architecture requires a lot of space. If the computation and memory storage could be located in the same space, this bottleneck could be eliminated.

“We are creating artificial neural networks, which seek to emulate the energy and area efficiencies of the brain,” explained Thomas Shranghamer, a doctoral student in the Das group and first author on a paper recently published in Nature Communications. “The brain is so compact it can fit on top of your shoulders, whereas a modern supercomputer takes up a space the size of two or three tennis courts.”

Just as the synapses connecting neurons in the brain can be reconfigured, the artificial neural networks the team is building can be reconfigured by applying a brief electric field to a sheet of graphene, a one-atom-thick layer of carbon atoms. In this work, they show at least 16 possible memory states, as opposed to the two in most oxide-based memristors, or memory resistors.

“What we have shown is that we can control a large number of memory states with precision using simple graphene field effect transistors,” Das said.
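To give a rough sense of what 16 memory states buy over two, the short Python sketch below compares how much information one memory cell can hold and how closely it can store an analog value. It is an illustration only, not the team's method: the function names are hypothetical, and it assumes the stored value is normalized to the range 0 to 1 and that the states are evenly spaced, neither of which is stated in the article.

```python
import math


def bits_per_cell(num_states: int) -> float:
    """Information one memory cell can hold, given its number of distinguishable states."""
    if num_states < 2:
        raise ValueError("a memory cell needs at least two states")
    return math.log2(num_states)


def quantize(weight: float, num_states: int) -> float:
    """Snap a weight in [0, 1] to the nearest of num_states evenly spaced levels.

    Assumption (not from the article): levels are evenly spaced between 0 and 1.
    """
    if num_states < 2:
        raise ValueError("a memory cell needs at least two states")
    if not 0.0 <= weight <= 1.0:
        raise ValueError("weight must lie in [0, 1]")
    top = num_states - 1
    return round(weight * top) / top


def worst_case_error(num_states: int) -> float:
    """Largest possible rounding error: half the gap between neighboring levels."""
    return 0.5 / (num_states - 1)


if __name__ == "__main__":
    target = 0.37  # an arbitrary analog value to store
    for states in (2, 16):
        stored = quantize(target, states)
        print(
            f"{states:>2} states: {bits_per_cell(states):.0f} bit(s) per cell, "
            f"stores {target} as {stored:.3f}, "
            f"worst-case error {worst_case_error(states):.3f}"
        )
```

Under these assumptions, a two-state cell holds one bit and can be off by as much as 0.5 when storing an analog value, while a 16-state cell holds four bits and is off by at most about 0.033. That is the dimmer-versus-light-switch distinction expressed in numbers.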

The team thinks that ramping up this technology to a commercial scale is feasible. With many of the largest semiconductor companies actively pursuing neuromorphic computing, Das believes they will find this work of interest.

In addition to Das and Shranghamer, the other author on the paper, titled “Graphene Memristive Synapses for High Precision Neuromorphic Computing,” is Aaryan Oberoi, a doctoral student in engineering science and mechanics.

Reference: 29 October 2020, Nature Communications.

The Army Research Office supported this work. The team has filed for a patent on this invention.