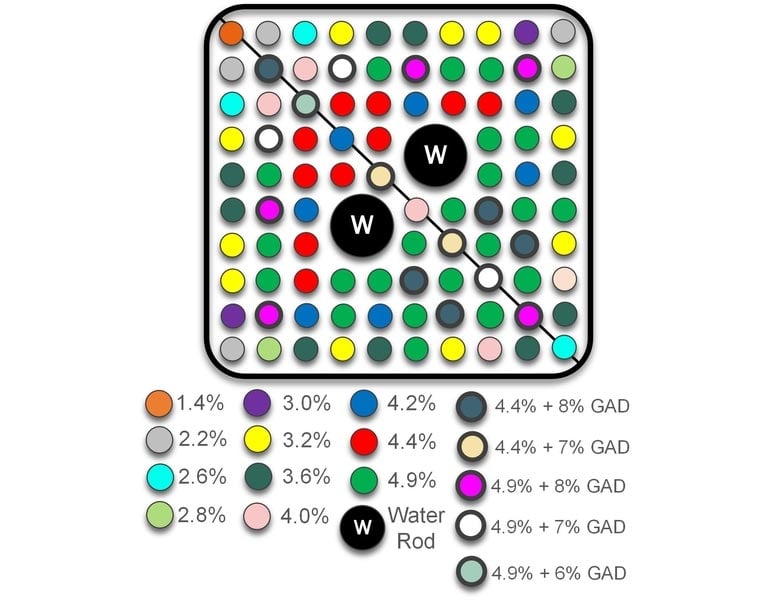

In this AI-designed layout for a boiling water reactor, fuel rods are ideally positioned around two fixed water rods to burn more efficiently. MIT researchers ran the equivalent of 36,000 simulations to find the optimal configurations, which could extend the life of the rods in an assembly by about 5 percent, generating $3 million in savings per year if scaled to the full reactor. Colors correspond to varying amounts of uranium and gadolinium oxide in each rod. Credit: Majdi Radaideh/MIT

Researchers show that deep reinforcement learning can be used to design more efficient nuclear reactors.

Nuclear energy provides more carbon-free electricity in the United States than solar and wind combined, making it a key player in the fight against climate change. But the U.S. nuclear fleet is aging, and operators are under pressure to streamline their operations to compete with coal- and gas-fired plants.

One of the key places to cut costs is deep in the reactor core, where energy is produced. If the fuel rods that drive reactions there are ideally placed, they burn less fuel and require less maintenance. Through decades of trial and error, nuclear engineers have learned to design better layouts to extend the life of pricey fuel rods. Now, artificial intelligence is poised to give them a boost.

Researchers at MIT and Exelon show that by turning the design process into a game, an AI system can be trained to generate dozens of optimal configurations that can make each rod last about 5 percent longer, saving a typical power plant an estimated $3 million a year, the researchers report. The AI system can also find optimal solutions faster than a human, and quickly modify designs in a safe, simulated environment. Their results were published in December 2020 in the journal Nuclear Engineering and Design.

“This technology can be applied to any nuclear reactor in the world,” says the study’s senior author, Koroush Shirvan, an assistant professor in MIT’s Department of Nuclear Science and Engineering. “By improving the economics of nuclear energy, which supplies 20 percent of the electricity generated in the U.S., we can help limit the growth of global carbon emissions and attract the best young talents to this important clean-energy sector.”

In a typical reactor, fuel rods are arranged on a grid, called an assembly, according to how much uranium and gadolinium oxide each one contains, like chess pieces on a board. The uranium drives the fission reactions, while the rare-earth element gadolinium slows them down. In an ideal layout, these competing effects balance out to produce efficient reactions. Engineers have tried using traditional algorithms to improve on human-devised layouts, but a standard 100-rod assembly can have an astronomical number of possible arrangements to evaluate. So far, they've had limited success.
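To give a feel for how "astronomical" the search space is, here is a rough count under a deliberately simplified, hypothetical model: each of 100 grid positions holds either a gadolinium "poison" rod or a uranium rod at one of a few enrichment levels, and the number of poison rods must fall between 16 and 18. Symmetry, water rods and physics limits are ignored, and `count_layouts` and the choice of 4 enrichment levels are illustrative assumptions, not figures from the study.

```python
from math import comb


def count_layouts(n_positions: int, min_gd: int, max_gd: int, u_levels: int) -> int:
    """Count layouts under a hypothetical, simplified model.

    Each position holds either a gadolinium "poison" rod or a uranium rod
    at one of `u_levels` enrichment levels; the number of poison rods must
    lie in [min_gd, max_gd]. Symmetry, water rods and physics limits are
    ignored, so this is only an order-of-magnitude illustration.
    """
    total = 0
    for k in range(min_gd, max_gd + 1):
        # Choose which k positions hold poison rods, then assign an
        # enrichment level to each of the remaining n - k uranium rods.
        total += comb(n_positions, k) * u_levels ** (n_positions - k)
    return total


if __name__ == "__main__":
    # Poison-rod placement alone (a single uranium type) already exceeds 10**19.
    print(f"{count_layouts(100, 16, 18, 1):.3e}")
    # With 4 hypothetical enrichment levels for the uranium rods.
    print(f"{count_layouts(100, 16, 18, 4):.3e}")
```

Even this toy model yields far more layouts than could ever be simulated one by one, which is why a search method that learns which moves are promising is attractive.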

The researchers wondered if deep reinforcement learning, an AI technique that has achieved superhuman mastery at games like chess and Go, could make the screening process go faster. Deep reinforcement learning combines deep neural networks, which excel at picking out patterns in reams of data, with reinforcement learning, which ties learning to a reward signal like winning a game, as in Go, or reaching a high score, as in Super Mario Bros.

Here, the researchers trained their agent to position the fuel rods under a set of constraints, earning more points with each favorable move. Each constraint, or rule, picked by the researchers reflects decades of expert knowledge rooted in the laws of physics. The agent might score points, for example, by positioning low-uranium rods on the edges of the assembly, to slow reactions there; by spreading out the gadolinium “poison” rods to maintain consistent burn levels; and by limiting the number of poison rods to between 16 and 18.
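The three example rules above can be turned into a scoring function. The sketch below is a minimal, hypothetical version: the `Rod` type, the 3.0 percent "low enrichment" threshold, and the point values are assumptions chosen for illustration, not the reward actually used by the MIT team. It scores a complete square layout by rewarding low-uranium rods on the edges, penalizing poison rods that sit side by side, and penalizing poison-rod counts outside 16 to 18.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Rod:
    enrichment: float  # uranium enrichment in percent (hypothetical values)
    gadolinium: bool   # True if the rod carries gadolinium oxide "poison"


def layout_reward(grid: List[List[Rod]], low_enrichment: float = 3.0,
                  min_gd: int = 16, max_gd: int = 18) -> float:
    """Score a square n x n layout with hypothetical rule-based points."""
    n = len(grid)
    reward = 0.0
    gd_cells = [(r, c) for r in range(n) for c in range(n) if grid[r][c].gadolinium]

    # Rule 1: +1 for every low-enrichment rod on the assembly edge,
    # where slowing reactions is desirable.
    for r in range(n):
        for c in range(n):
            on_edge = r in (0, n - 1) or c in (0, n - 1)
            if on_edge and grid[r][c].enrichment <= low_enrichment:
                reward += 1.0

    # Rule 2: spread poison rods out; -2 for each side-adjacent pair.
    gd_set = set(gd_cells)
    for r, c in gd_cells:
        for dr, dc in ((0, 1), (1, 0)):  # right and down, so each pair counts once
            if (r + dr, c + dc) in gd_set:
                reward -= 2.0

    # Rule 3: keep the number of poison rods within [min_gd, max_gd];
    # the penalty grows with the distance to the nearest allowed count.
    count = len(gd_cells)
    if not (min_gd <= count <= max_gd):
        reward -= 10.0 * min(abs(count - min_gd), abs(count - max_gd))
    return reward


if __name__ == "__main__":
    # A 10 x 10 layout with low-enrichment edges and 16 spread-out poison rods.
    poison = [(r, c) for r in range(1, 9) for c in range(1, 9) if (r + c) % 2 == 0][:16]
    grid = [[Rod(2.5 if r in (0, 9) or c in (0, 9) else 4.0, (r, c) in poison)
             for c in range(10)] for r in range(10)]
    print(layout_reward(grid))
```

In a reinforcement-learning setup where the agent places or swaps one rod at a time, the change in such a score between steps could serve as the per-move reward, so that "favorable moves" earn points as they happen rather than only at the end.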

“After you wire in rules, the neural networks start to take very good actions,” says the study’s lead author Majdi Radaideh, a postdoc in Shirvan’s lab. “They’re not wasting time on random processes. It was fun to watch them learn to play the game like a human would.”

Through reinforcement learning, AI has learned to play increasingly complex games as well as or better than humans. But its capabilities remain relatively untested in the real world. Here, the researchers show that reinforcement learning has potentially powerful real-world applications.

“This study is an exciting example of transferring an AI technique for playing board games and video games to helping us solve practical problems in the world,” says study co-author Joshua Joseph, a research scientist at the MIT Quest for Intelligence.

Exelon is now testing a beta version of the AI system in a virtual environment that mimics an assembly within a boiling water reactor, and about 200 assemblies within a pressurized water reactor, which is globally the most common type of reactor. Based in Chicago, Illinois, Exelon owns and operates 21 nuclear reactors across the United States. The company could be ready to implement the system in a year or two, a spokesperson says.

Reference: “Physics-informed reinforcement learning optimization of nuclear assembly design” by Majdi I. Radaideh, Isaac Wolverton, Joshua Joseph, James J. Tusar, Uuganbayar Otgonbaatar, Nicholas Roy, Benoit Forget and Koroush Shirvan, 5 December 2020, Nuclear Engineering and Design. DOI: 10.1016/j.nucengdes.2020.110966

The study’s other authors are Isaac Wolverton, an MIT senior who joined the project through the Undergraduate Research Opportunities Program; Nicholas Roy and Benoit Forget of MIT; and James Tusar and Ugi Otgonbaatar of Exelon.