Exascale computing is the next milestone in the development of supercomputers. Able to process information much faster than today’s most powerful supercomputers, exascale computers will give scientists a new tool for addressing some of the biggest challenges facing our world, from climate change to understanding cancer to designing new kinds of materials.

Exascale computers are digital computers, roughly similar to today’s computers and supercomputers but with much more powerful hardware. This makes them different from quantum computers, which represent a completely new approach to building a computer suited to specific types of questions.

How does exascale computing compare to other computers? One way scientists measure computer performance is in floating point operations per second (FLOPS). These involve simple arithmetic like addition and multiplication problems. In general, a person can solve addition problems with pen and paper at a speed of 1 FLOP. That means it takes us one second to do one simple addition problem. Computers are much faster than people. Their performance in FLOPS has so many zeros that researchers use prefixes instead. For example, the “giga” prefix means a number with nine zeros. A modern personal computer processor can operate in the gigaflop range, at about 150,000,000,000 FLOPS, or 150 gigaFLOPS. “Tera” means 12 zeros. Computers hit the terascale milestone in 1996 with the Department of Energy’s (DOE) Intel ASCI Red supercomputer. ASCI Red’s peak performance was 1,340,000,000,000 FLOPS, or 1.34 teraFLOPS.

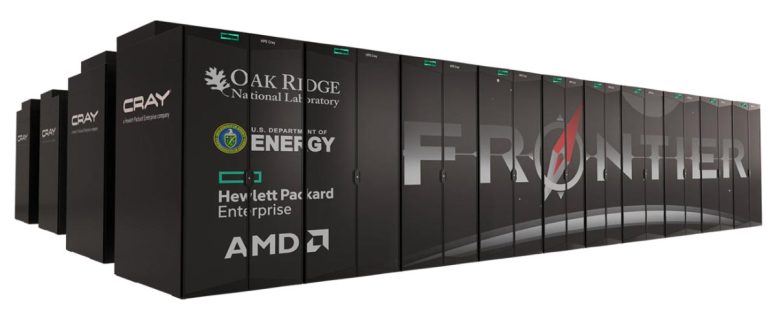

The Frontier supercomputer at Oak Ridge National Laboratory (ORNL) is expected to be the first exascale computer in the United States. Credit: Image courtesy of Oak Ridge National Laboratory

Exascale computing is unimaginably faster than that. “Exa” means 18 zeros. That means an exascale computer can perform more than 1,000,000,000,000,000,000 FLOPS, or 1 exaFLOP. Even at exactly 1 exaFLOP, that is about 750,000 times ASCI Red’s peak performance in 1996.
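The prefix arithmetic above can be checked with a short Python sketch. It uses only the figures quoted in the text (150 gigaFLOPS for a personal computer, 1.34 teraFLOPS for ASCI Red, 1 exaFLOP for an exascale machine, and 1 FLOP for a person with pen and paper). The names `PREFIXES` and `flops` are made up for this illustration. At exactly 1 exaFLOP the ratio to ASCI Red's peak comes out near 750,000, so a machine would need to exceed about 1.34 exaFLOPS to pass the one-million mark.

```python
# SI prefixes from the text, expressed as powers of ten ("number of zeros").
PREFIXES = {"giga": 9, "tera": 12, "peta": 15, "exa": 18}


def flops(value, prefix):
    """Convert a prefixed figure, e.g. (1.34, "tera"), to plain FLOPS."""
    return value * 10 ** PREFIXES[prefix]


# Figures quoted in the article.
personal_computer = flops(150, "giga")   # 150 gigaFLOPS
asci_red = flops(1.34, "tera")           # 1.34 teraFLOPS (1996 peak)
exascale = flops(1, "exa")               # 1 exaFLOP, the exascale threshold

# How many times faster is a 1 exaFLOP machine?
ratio_vs_asci_red = exascale / asci_red
ratio_vs_pc = exascale / personal_computer

# A person working at 1 FLOP needs one second per operation, so matching
# one second of exascale work takes 10**18 seconds. Convert that to years.
SECONDS_PER_YEAR = 365.25 * 24 * 3600
years_by_hand = exascale / SECONDS_PER_YEAR

print(f"vs ASCI Red:  about {ratio_vs_asci_red:,.0f} times faster")
print(f"vs a 150 gigaFLOPS PC: about {ratio_vs_pc:,.0f} times faster")
print(f"by hand: about {years_by_hand:.2e} years per exascale-second")
```

Under these assumptions, one second of work at the exascale threshold would take a person tens of billions of years with pen and paper.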

Building a computer this powerful isn’t easy. When scientists started thinking seriously about exascale computers, they predicted these computers might need as much energy as up to 50 homes would use. That figure has been slashed, thanks to ongoing research with computer vendors. Scientists also need ways to ensure exascale computers are reliable, despite the huge number of components they contain. In addition, they must find ways to move data between processors and storage fast enough to prevent slowdowns.

Why do we need exascale computers? The challenges facing our world and the most complex scientific research questions need more and more computer power to solve. Exascale supercomputers will allow scientists to create more realistic Earth system and climate models. They will help researchers understand the nanoscience behind new materials. Exascale computers will help us build future fusion power plants. They will power new studies of the universe, from particle physics to the formation of stars. And these computers will help ensure the safety and security of the United States by supporting tasks such as the maintenance of our nuclear deterrent.

Fast Facts

- Watch a video of an exascale-powered COVID simulation from NVIDIA.

- Computers have been increasing steadily in performance since the 1940s.

- The Colossus vacuum tube computer was the first electronic computer in the world. Built in Britain during the Second World War, Colossus ran at 500,000 FLOPS.

- The CDC 6600, in 1964, was the first supercomputer, running at 3 megaFLOPS.

- The Cray-2, in 1985, was the first supercomputer to reach over 1 gigaFLOP.

- ASCI Red, in 1996, was the first massively parallel computer to reach over a teraFLOP.

- Roadrunner, in 2008, was the first supercomputer to reach 1 petaFLOP.

DOE Contributions to Exascale Computing

The Department of Energy (DOE) Office of Science’s Advanced Scientific Computing Research program has worked for decades with U.S. technology companies to build supercomputers that break barriers in scientific discovery. Lawrence Berkeley, Oak Ridge, and Argonne National Laboratories house DOE Office of Science user facilities for high-performance computing. These facilities give scientists computer access based on the potential benefits of their research. DOE’s Exascale Computing Initiative, co-led by the Office of Science and DOE’s National Nuclear Security Administration (NNSA), began in 2016 with the goal of speeding development of an exascale computing ecosystem. One of the components of the initiative is the seven-year Exascale Computing Project. The project aims to prepare scientists and computing facilities for exascale. It focuses on three broad areas:

- Application Development: building applications that take full advantage of exascale computers.

- Software Technology: developing new tools for managing systems, handling massive amounts of data, and integrating future computers with existing computer systems.

- Hardware and Integration: establishing partnerships to create new components, new training, standards, and continuous testing to make these new tools function at our national labs and other facilities.

DOE is deploying the United States’ first exascale computers: Frontier at ORNL, Aurora at Argonne National Laboratory, and El Capitan at Lawrence Livermore National Laboratory.

Source: SciTechDaily
