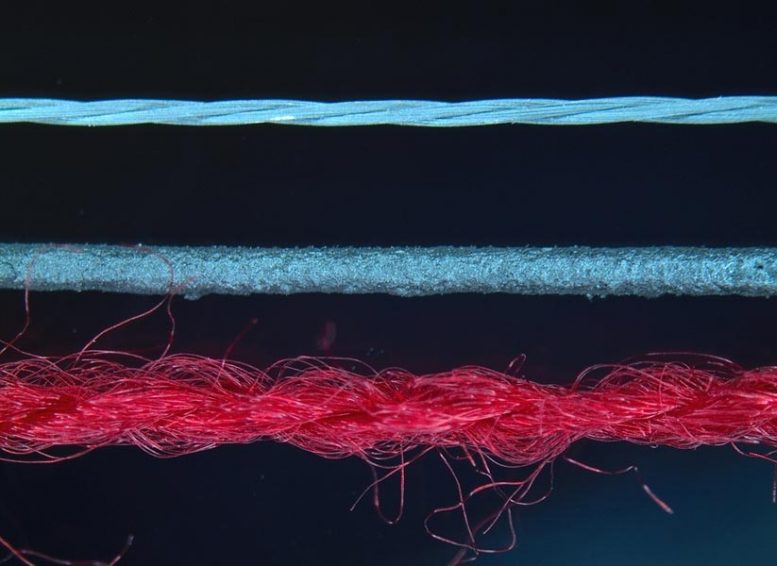

Tactile electronics developed at MIT combine conventional textile fibers with a small amount of custom-made functional fibers that sense pressure from the person wearing the garment. Credit: Image courtesy of Nature Electronics.

By measuring a person’s movements and poses, smart clothes developed at MIT CSAIL could be used for athletic training, rehabilitation, or health monitoring in elder-care facilities.

In recent years there have been exciting breakthroughs in wearable technologies, like smartwatches that can monitor your breathing and blood oxygen levels.

But what about a wearable that can detect how you move as you do a physical activity or play a sport, and could potentially even offer feedback on how to improve your technique?

And, as a major bonus, what if the wearable were something you’d actually already be wearing, like a shirt or a pair of socks?

That’s the idea behind a new set of MIT-designed clothing that uses special fibers to sense a person’s movement via touch. Among other things, the researchers showed that their clothes can determine whether someone is sitting, walking, or holding particular poses.

The group from MIT’s Computer Science and Artificial Intelligence Lab (CSAIL) says that their clothes could be used for athletic training and rehabilitation. With patients’ permission, they could even help passively monitor the health of residents in assisted-care facilities and determine if, for example, someone has fallen or is unconscious.

The researchers have developed a range of prototypes, from socks and gloves to a full vest. The team’s “tactile electronics” combine conventional textile fibers with a small amount of custom-made functional fibers that sense pressure from the person wearing the garment.

According to CSAIL graduate student Yiyue Luo, a key advantage of the team’s design is that, unlike many existing wearable electronics, theirs can be incorporated into traditional large-scale clothing production. The machine-knitted tactile textiles are soft, stretchable, breathable, and can take a wide range of forms.

“Traditionally it’s been hard to develop a mass-production wearable that provides high-accuracy data across a large number of sensors,” says Luo, lead author on a new paper about the project that has been published in Nature Electronics. “When you manufacture lots of sensor arrays, some of them will not work and some of them will work worse than others, so we developed a self-correcting mechanism that uses a self-supervised machine learning algorithm to recognize and adjust when certain sensors in the design are off-base.”

The team’s clothes have a range of capabilities. Their socks predict motion by looking at how different sequences of tactile footprints correlate to different poses as the user transitions from one pose to another. The full-sized vest can also detect the wearer’s pose and activity, as well as the texture of the surfaces it contacts.

The authors imagine a coach using the sensors to analyze people’s postures and suggest improvements. An experienced athlete could also use them to record their posture so that beginners can learn from it. In the long term, the researchers even imagine that robots could be trained to perform different activities using data from the wearables.

“Imagine robots that are no longer tactilely blind, and that have ‘skins’ that can provide tactile sensing just like we have as humans,” says corresponding author Wan Shou, a postdoc at CSAIL. “Clothing with high-resolution tactile sensing opens up a lot of exciting new application areas for researchers to explore in the years to come.”

Reference: “Learning human–environment interactions using conformal tactile textiles” by Yiyue Luo, Yunzhu Li, Pratyusha Sharma, Wan Shou, Kui Wu, Michael Foshey, Beichen Li, Tomás Palacios, Antonio Torralba and Wojciech Matusik, 24 March 2021, Nature Electronics. DOI: 10.1038/s41928-021-00558-0

The paper was co-written by MIT professors Antonio Torralba, Wojciech Matusik, and Tomás Palacios, alongside PhD students Yunzhu Li, Pratyusha Sharma, and Beichen Li; postdoc Kui Wu; and research engineer Michael Foshey.

The work was partially funded by Toyota Research Institute.