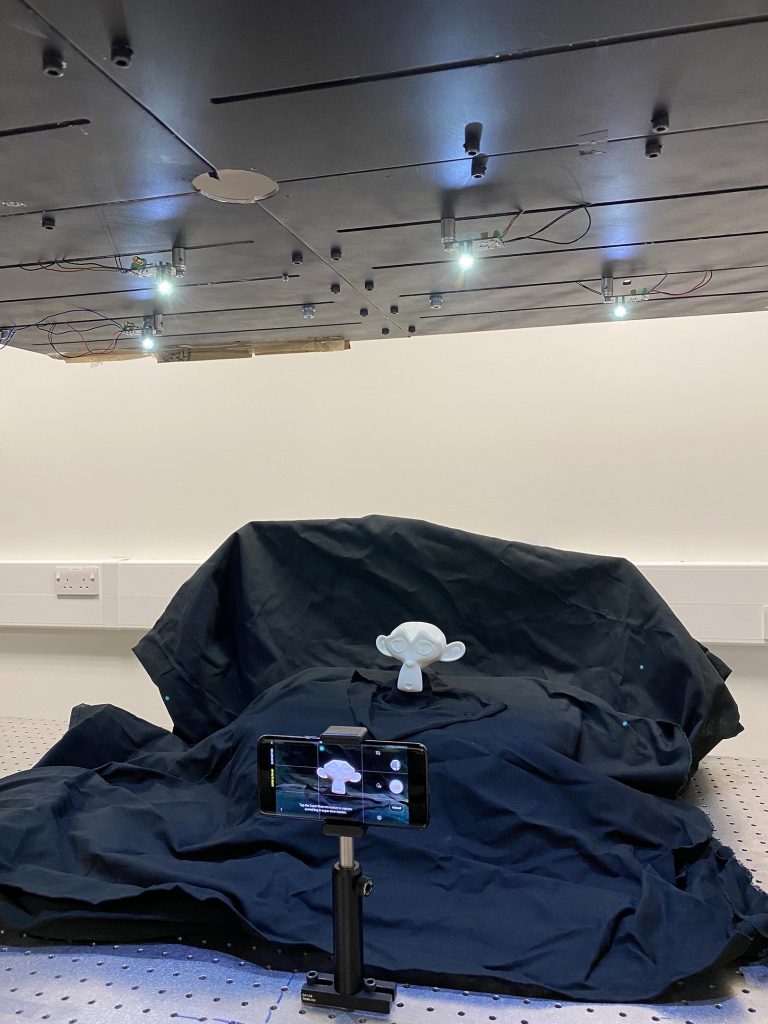

Researchers developed a way to use overhead LED lighting and a smartphone to create 3D images of a small figurine. Credit: Emma Le Francois, University of Strathclyde

Simple, yet smart LED illumination could provide 3D images for surveillance and robotic applications.

As LEDs replace traditional lighting systems, they bring more smart capabilities to everyday lighting. While you might use your smartphone to dim LED lighting at home, researchers have taken this further by tapping into dynamically controlled LEDs to create a simple illumination system for 3D imaging.

“Current video surveillance systems such as the ones used for public transport rely on cameras that provide only 2D information,” said Emma Le Francois, a doctoral student in the research group led by Martin Dawson, Johannes Herrnsdorf and Michael Strain at the University of Strathclyde in the UK. “Our new approach could be used to illuminate different indoor areas to allow better surveillance with 3D images, create a smart work area in a factory, or to give robots a more complete sense of their environment.”

In The Optical Society (OSA) journal Optics Express, the researchers demonstrate that 3D optical imaging can be performed with a cell phone and LEDs without requiring any complex manual processes to synchronize the camera with the lighting.

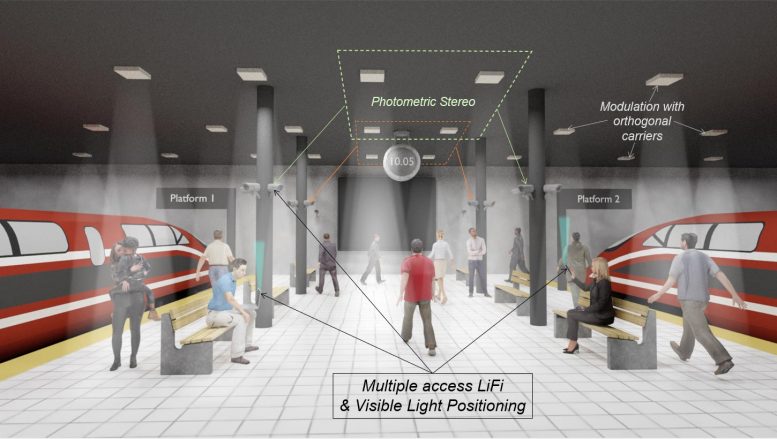

“Deploying a smart-illumination system in an indoor area allows any camera in the room to use the light and retrieve the 3D information from the surrounding environment,” said Le Francois. “LEDs are being explored for a variety of different applications, such as optical communication, visible light positioning and imaging. One day the LED smart-lighting system used for lighting an indoor area might be used for all of these applications at the same time.”

Illuminating from above

Human vision relies on the brain to reconstruct depth information when we view a scene from two slightly different directions with our two eyes. Depth information can also be acquired using a method called photometric stereo imaging in which one detector, or camera, is combined with illumination that comes from multiple directions. This lighting setup allows images to be recorded with different shadowing, which can then be used to reconstruct a 3D image.

Photometric stereo imaging traditionally requires four light sources, such as LEDs, which are deployed symmetrically around the viewing axis of a camera. In the new work, the researchers show that 3D images can also be reconstructed when objects are illuminated from the top down but imaged from the side. This setup allows overhead room lighting to be used for illumination.
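As a rough illustration of the conventional setup described above, the sketch below recovers the surface orientation at a single pixel from four images, each lit from a known direction. It assumes a matte (Lambertian) surface, where brightness equals albedo times the dot product of the light direction and the surface normal. This is the textbook formulation, not the researchers' top-down algorithm, and the function names `solve3` and `estimate_normal`, the light directions and the test values are all illustrative assumptions.

```python
import math


def solve3(a, b):
    """Solve the 3x3 linear system a @ x = b using Cramer's rule."""
    def det(m):
        return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
                - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
                + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))

    d = det(a)
    if abs(d) < 1e-12:
        raise ValueError("light directions are degenerate")
    x = []
    for col in range(3):
        # Replace one column of a with b, as Cramer's rule requires.
        m = [[b[r] if c == col else a[r][c] for c in range(3)] for r in range(3)]
        x.append(det(m) / d)
    return x


def estimate_normal(lights, intensities):
    """Least-squares Lambertian fit for one pixel.

    Model (an assumption): I_k = albedo * dot(l_k, n) for each light k.
    Returns the unit normal n and the albedo.
    """
    # Normal equations (L^T L) g = L^T I, where g = albedo * n.
    ata = [[sum(l[i] * l[j] for l in lights) for j in range(3)] for i in range(3)]
    atb = [sum(l[i] * v for l, v in zip(lights, intensities)) for i in range(3)]
    g = solve3(ata, atb)
    albedo = math.sqrt(sum(c * c for c in g))
    if albedo == 0.0:
        raise ValueError("pixel is completely dark; normal is undefined")
    return [c / albedo for c in g], albedo


# Four lights placed symmetrically around the camera's viewing axis (z),
# each tilted 30 degrees away from it. These values are made up.
s, c = math.sin(math.radians(30)), math.cos(math.radians(30))
lights = [(s, 0.0, c), (-s, 0.0, c), (0.0, s, c), (0.0, -s, c)]

# Synthetic pixel: a known normal and albedo generate the four brightness values.
true_normal = (0.0, 0.6, 0.8)
true_albedo = 0.5
intensities = [true_albedo * sum(a * b for a, b in zip(l, true_normal)) for l in lights]

normal, albedo = estimate_normal(lights, intensities)
print(normal, albedo)
```

Repeating this fit for every pixel gives a map of surface normals, which can then be integrated into a depth map. Real images would also need shadowed and saturated pixels to be handled, which this sketch ignores.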

In work supported under the UK’s EPSRC ‘Quantic’ research program, the researchers developed algorithms that modulate each LED in a unique way. This acts like a fingerprint that allows the camera to determine which LED generated which image to facilitate the 3D reconstruction. The new modulation approach also carries its own clock signal so that the image acquisition can be self-synchronized with the LEDs by simply using the camera to passively detect the LED clock signal.
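The fingerprint idea can be sketched with a simple stand-in scheme. The example below gives each LED an on/off pattern taken from a Walsh-Hadamard code and then separates each LED's contribution to a pixel by correlating the recorded frames with that pattern. This is an assumption chosen for illustration: the article does not specify the researchers' modulation format, and the sketch does not model the embedded clock signal or the self-synchronization step. The names `walsh_row`, `led_codes`, `simulate_frames` and `demultiplex` are hypothetical.

```python
def walsh_row(index, length):
    """Row `index` of a Sylvester-type Hadamard matrix, entries +1 or -1.

    `length` must be a power of two for the rows to be mutually orthogonal.
    """
    return [1 if bin(index & t).count("1") % 2 == 0 else -1 for t in range(length)]


def led_codes(num_leds, length=8):
    """Assign one balanced +1/-1 code per LED (+1 means LED on, -1 means off)."""
    if num_leds >= length:
        raise ValueError("need more time slots than LEDs")
    # Skip row 0 (all +1): it is not balanced, so it cannot reject ambient light.
    return [walsh_row(k + 1, length) for k in range(num_leds)]


def simulate_frames(codes, contributions, ambient):
    """Brightness of one pixel in each frame: ambient plus every LED that is on."""
    frames = []
    for t in range(len(codes[0])):
        value = ambient
        for code, contribution in zip(codes, contributions):
            if code[t] == 1:
                value += contribution
        frames.append(value)
    return frames


def demultiplex(frames, codes):
    """Recover each LED's contribution by correlating frames with its code.

    Because the codes are balanced and orthogonal, the constant ambient term
    and the other LEDs cancel, leaving contribution * length / 2.
    """
    n = len(frames)
    return [2.0 * sum(w * f for w, f in zip(code, frames)) / n for code in codes]


# Made-up example: four LEDs light one pixel with different strengths,
# on top of a constant ambient level.
codes = led_codes(4)
frames = simulate_frames(codes, contributions=[10.0, 20.0, 5.0, 40.0], ambient=7.0)
recovered = demultiplex(frames, codes)
print(recovered)
```

Applying the same correlation to every pixel would yield one image per LED, which is the input a photometric stereo reconstruction needs. The sketch assumes the frames are already aligned with the code slots, which is the problem the researchers' self-synchronizing clock signal is designed to solve.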

“We wanted to make photometric stereo imaging more easily deployable by removing the link between the light sources and the camera,” said Le Francois. “To our knowledge, we are the first to demonstrate a top-down illumination system with a side image acquisition where the modulation of the light is self-synchronized with the camera.”

3D imaging with a smartphone

To demonstrate this new approach, the researchers used their modulation scheme with a photometric stereo setup based on commercially available LEDs. A simple Arduino board provided the electronic control for the LEDs. Images were captured using the high-speed video mode of a smartphone. They imaged a 48-millimeter-tall figurine that they 3D printed with a matte material to avoid any shiny surfaces that might complicate imaging.

In a public area, LEDs could be used for general lighting, visible light communication and 3D video surveillance. The illustration shows multiple access LiFi — wireless communication technology that uses light to transmit data and position between devices — and visible light positioning in a train station. Credit: Emma Le Francois, University of Strathclyde

After identifying the best position for the LEDs and the smartphone, the researchers achieved a reconstruction error of just 2.6 millimeters for the figurine when imaged from 42 centimeters away. This error shows that the quality of the reconstruction was comparable to that of other photometric stereo imaging approaches. They were also able to reconstruct images of a moving object and showed that the method is not affected by ambient light.

In the current system, the image reconstruction takes a few minutes on a laptop. To make the system practical, the researchers are working to decrease the computational time to just a few seconds by incorporating a deep-learning neural network that would learn to reconstruct the shape of the object from the raw image data.

Reference: “Synchronization-free top-down illumination photometric stereo imaging using light-emitting diodes and a mobile device” by Emma Le Francois, Johannes Herrnsdorf, Jonathan J. D. McKendry, Laurence Broadbent, Glynn Wright, Martin D. Dawson and Michael J. Strain, 11 January 2021, Optics Express. DOI: 10.1364/OE.408658