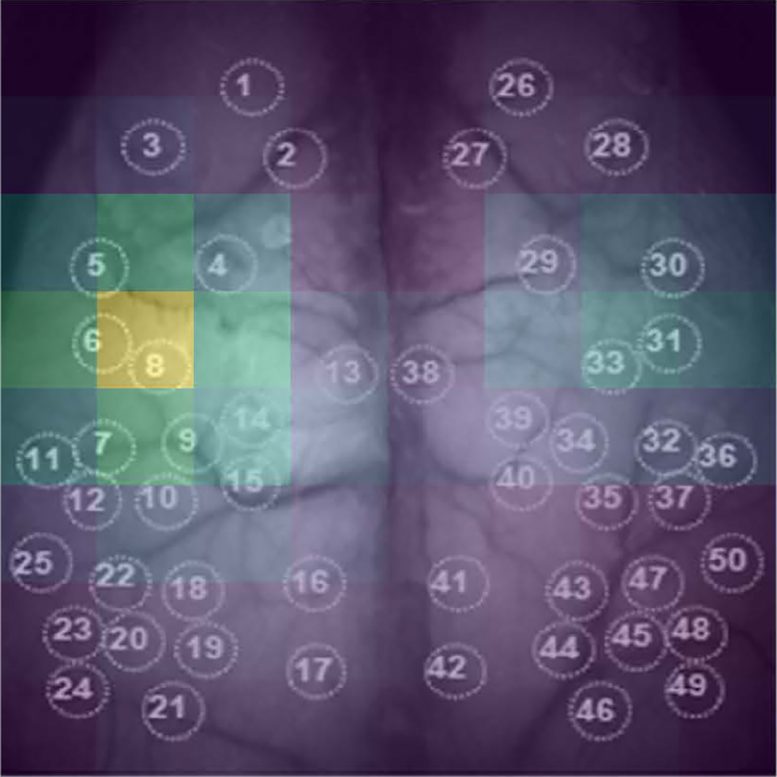

A new “end-to-end” deep learning method for predicting behavioral states uses whole-cortex functional imaging and does not require preprocessing or pre-specified features. Developed by medical student AJIOKA Takehiro and a team led by Kobe University’s TAKUMI Toru, the approach also makes it possible to identify which brain regions are most relevant to the algorithm (pictured). The ability to extract this information lays the foundation for future developments of brain-machine interfaces. Credit: Ajioka Takehiro

An AI image recognition algorithm can predict whether a mouse is moving or not based on brain functional imaging data. The researchers from Kobe University have also developed a method to identify which input data is relevant, shining light into the AI black box with the potential to contribute to brain-machine interface technology.

For the production of brain-machine interfaces, it is necessary to understand how brain signals and affected actions relate to each other. This is called “neural decoding,” and most research in this field is done on the brain cells’ electrical activity, which is measured by electrodes implanted into the brain. On the other hand, functional imaging technologies, such as

Breakthrough in Neural Decoding with AI

Kobe University medical student Ajioka Takehiro used the interdisciplinary expertise of the team led by neuroscientist Takumi Toru to tackle this issue. “Our experience with VR-based real-time imaging and motion tracking systems for mice and deep learning techniques allowed us to explore ‘end-to-end’ deep learning methods, which means that they don’t require preprocessing or pre-specified features, and thus assess cortex-wide information for neural decoding,” says Ajioka. They applied a combination of two different deep learning algorithms, one for spatial and one for temporal patterns, to whole-cortex film data from mice resting or running on a treadmill, and trained their AI model to accurately predict from the cortex image data whether the mouse is moving or resting.
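To make the idea of pairing a spatial and a temporal algorithm more concrete, here is a minimal, dependency-free sketch of how such a pipeline could be wired together. It is an illustration under stated assumptions, not the researchers' model: the article does not name the two networks, so the sketch assumes a tiny convolutional stage for the spatial patterns and a plain recurrent unit for the temporal ones. All function names, sizes, and the random (untrained) weights are hypothetical.

```python
import math
import random


def conv2d_valid(frame, kernel):
    """Slide a small kernel over one 2-D frame (no padding, stride 1).

    As in most deep learning code, this is a cross-correlation.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(frame) - kh + 1
    out_w = len(frame[0]) - kw + 1
    return [
        [
            sum(frame[i + a][j + b] * kernel[a][b]
                for a in range(kh) for b in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def spatial_features(frame, kernels):
    """Spatial stage (assumed): ReLU, then global average pooling per kernel."""
    feats = []
    for kernel in kernels:
        fmap = conv2d_valid(frame, kernel)
        values = [max(0.0, v) for row in fmap for v in row]
        feats.append(sum(values) / len(values))
    return feats


def rnn_step(hidden, feats, w_in, w_rec):
    """Temporal stage (assumed): one step of a plain tanh recurrent unit."""
    new_hidden = []
    for k in range(len(hidden)):
        total = sum(w_in[k][f] * feats[f] for f in range(len(feats)))
        total += sum(w_rec[k][h] * hidden[h] for h in range(len(hidden)))
        new_hidden.append(math.tanh(total))
    return new_hidden


def predict_running_probability(clip, params):
    """Map a clip (list of 2-D frames) to a probability that the mouse runs."""
    hidden = [0.0] * len(params["w_rec"])
    for frame in clip:
        feats = spatial_features(frame, params["kernels"])
        hidden = rnn_step(hidden, feats, params["w_in"], params["w_rec"])
    logit = sum(w * h for w, h in zip(params["w_out"], hidden)) + params["b_out"]
    return 1.0 / (1.0 + math.exp(-logit))  # sigmoid for a two-class decision


def random_params(n_kernels=2, kernel_size=3, n_hidden=4, seed=0):
    """Untrained placeholder weights; a real model would learn these."""
    rng = random.Random(seed)

    def mat(rows, cols):
        return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]

    return {
        "kernels": [mat(kernel_size, kernel_size) for _ in range(n_kernels)],
        "w_in": mat(n_hidden, n_kernels),
        "w_rec": mat(n_hidden, n_hidden),
        "w_out": [rng.uniform(-0.5, 0.5) for _ in range(n_hidden)],
        "b_out": 0.0,
    }


if __name__ == "__main__":
    rng = random.Random(1)
    # Hypothetical stand-in for imaging data: 5 frames of 8 x 8 pixels.
    clip = [[[rng.random() for _ in range(8)] for _ in range(8)] for _ in range(5)]
    p = predict_running_probability(clip, random_params())
    print(f"P(running) = {p:.3f}")
```

Because the weights are random, the printed probability carries no meaning. The point is only the data flow: each frame is summarized spatially, the summaries are accumulated over time, and the final state is turned into a moving-versus-resting prediction. In practice, this kind of model would be built and trained with a deep learning framework rather than hand-written loops.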

In the journal PLoS Computational Biology, the Kobe University researchers report that their model has an