University of Minnesota researchers have introduced a hardware innovation called CRAM that could reduce AI energy use by up to 2,500 times by processing data within memory, promising significant advances in AI efficiency.

This device could slash artificial intelligence energy consumption by at least 1,000 times.

Engineering researchers at the University of Minnesota Twin Cities have developed an advanced hardware device that could decrease energy use in artificial intelligence (AI) computing applications by a factor of at least 1,000.

Introduction of CRAM Technology

A team of researchers at the University of Minnesota College of Science and Engineering has demonstrated a new model, called computational random-access memory (CRAM), in which data never leaves the memory.

“This work is the first experimental demonstration of CRAM, where the data can be processed entirely within the memory array without the need to leave the grid where a computer stores information,” said Yang Lv, a University of Minnesota Department of Electrical and Computer Engineering postdoctoral researcher and first author of the paper.
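To make the data-movement argument concrete, here is a toy cost model in Python. It is a minimal sketch under loudly stated assumptions: the energy constants, the three-transfers-per-operation rule, and the function names `conventional_cost` and `in_memory_cost` are all hypothetical and do not come from the CRAM paper. It only illustrates why keeping operands inside the memory array avoids the cost of shuttling them to and from a separate processor.

```python
from dataclasses import dataclass

# HYPOTHETICAL cost constants chosen only for illustration;
# they are NOT measurements or estimates from the CRAM paper.
ENERGY_PER_TRANSFER = 100.0  # arbitrary units per word moved across the memory bus
ENERGY_PER_OP = 1.0          # arbitrary units per logic operation


@dataclass
class CostReport:
    transfers: int  # words moved between memory and processor
    energy: float   # total energy in the arbitrary units above


def conventional_cost(num_ops: int) -> CostReport:
    """Processor-centric model: each operation reads two operands
    from memory and writes one result back (3 bus transfers)."""
    transfers = 3 * num_ops
    energy = transfers * ENERGY_PER_TRANSFER + num_ops * ENERGY_PER_OP
    return CostReport(transfers, energy)


def in_memory_cost(num_ops: int) -> CostReport:
    """CRAM-style model: operands stay in the memory array, so the
    only cost counted is the logic operation itself."""
    return CostReport(0, num_ops * ENERGY_PER_OP)


if __name__ == "__main__":
    n = 1_000_000
    conv = conventional_cost(n)
    cram = in_memory_cost(n)
    print(f"conventional: {conv.transfers} transfers, energy {conv.energy:.0f}")
    print(f"in-memory:    {cram.transfers} transfers, energy {cram.energy:.0f}")
    print(f"ratio: {conv.energy / cram.energy:.0f}x")
```

With these made-up constants the conventional design uses about 301 times more energy than the in-memory one. That ratio is an artifact of the chosen constants, not the paper's estimate, and the model ignores any extra per-operation cost that in-memory logic may carry.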

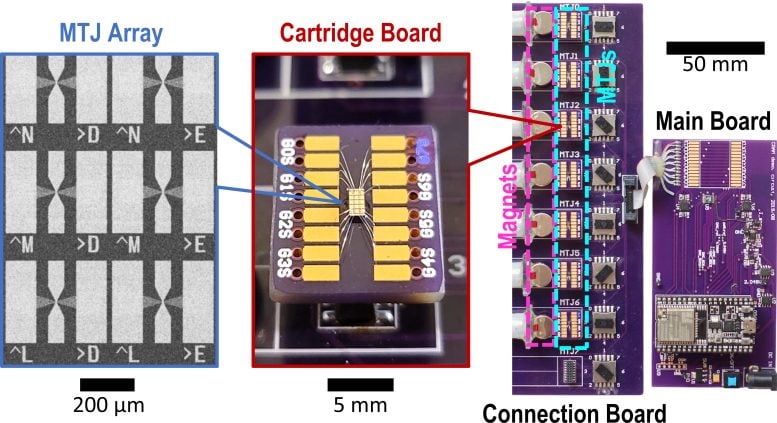

A custom-built hardware device could help make artificial intelligence more energy efficient. Credit: University of Minnesota Twin Cities

In March 2024, the International Energy Agency (IEA) issued a global energy use forecast projecting that energy consumption for AI is likely to double, from 460 terawatt-hours (TWh) in 2022 to 1,000 TWh in 2026. That is roughly equivalent to the electricity consumption of the entire country of Japan.
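The IEA figures can be checked with a few lines of arithmetic. This Python sketch computes the growth factor between 2022 and 2026 and, assuming smooth compound growth (an assumption of this example, not part of the IEA forecast), the implied annual growth rate. The helper names are illustrative only.

```python
# Figures from the IEA forecast cited above, in terawatt-hours (TWh).
AI_TWH_2022 = 460
AI_TWH_2026 = 1_000


def growth_factor(start: float, end: float) -> float:
    """How many times larger the end value is than the start value."""
    return end / start


def implied_annual_growth(start: float, end: float, years: int) -> float:
    """Constant yearly growth rate that turns `start` into `end` over
    `years` years (assumes smooth compound growth)."""
    return (end / start) ** (1 / years) - 1


factor = growth_factor(AI_TWH_2022, AI_TWH_2026)
cagr = implied_annual_growth(AI_TWH_2022, AI_TWH_2026, 2026 - 2022)

print(f"Growth factor 2022 -> 2026: {factor:.2f}x")  # 2.17x
print(f"Implied annual growth: {cagr:.1%}")          # 21.4%
```

A factor of about 2.17 is consistent with the forecast's "likely to double" wording.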

According to the new paper’s authors, a CRAM-based …

SciTechDaily