A new artificial intelligence (AI) system has been developed to help people without design training create applications and software for smartphones and personal computers. With this system, non-designers can quickly and easily create a user-friendly mobile app.

A research team led by Professor Sungahn Ko in the School of Electrical and Computer Engineering at UNIST has developed a deep learning-based AI system that recommends effective layouts by assessing the graphical user interfaces (GUIs) of mobile applications.

A GUI is a form of user interface that lets users interact with electronic devices through graphical icons and other visual indicators. Because GUIs play a pivotal role in attracting potential customers, it is important to create an intuitive, convenient, and attractive user interface and user experience. Yet people without design training often struggle to design user-friendly GUIs, and the research team set out to address this challenge with AI.

As smartphones become ubiquitous in daily life, many activities, such as shopping, making travel inquiries, and social networking, can be done anywhere at any time through mobile apps. As a result, more and more people hope to start their own businesses or revitalize existing ones with mobile apps. However, developing intuitive and user-friendly applications is a painstaking process for people without relevant experience or guidance. This is especially true in the mobile environment, where the small screen makes the visual arrangement of icons and text all the more important.

Professor Ko's team addressed this issue by combining deep learning-based AI with an iterative design process. The new system learns the strengths and weaknesses of existing GUI design patterns, evaluates the GUIs a user creates for a mobile app, and suggests alternatives.

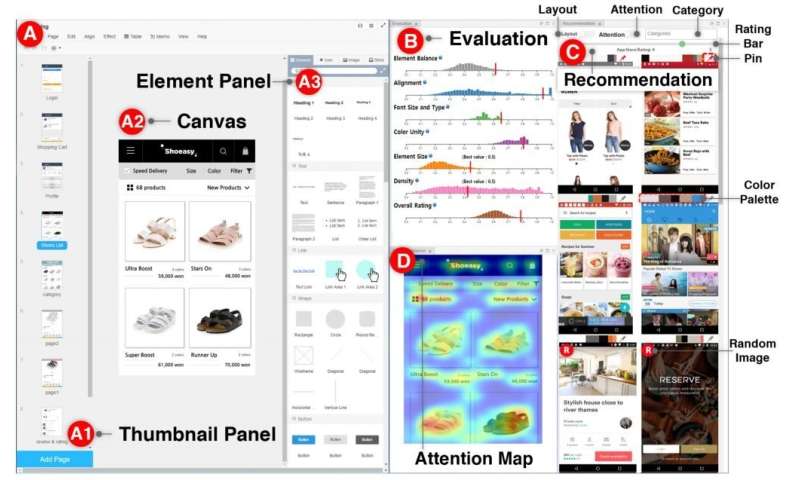

To this end, the research team conducted semi-structured interviews and, based on the findings, built a GUI prototyping assistance tool called GUIComp that integrates three types of feedback mechanisms: recommendation, evaluation, and attention. According to the research team, the tool can be connected to GUI design software as an extension, and it provides real-time, multifaceted feedback on a user's current design. The team also conducted two user studies, in which participants created mobile GUIs with or without GUIComp and online workers then assessed the resulting GUIs. The results show that GUIComp facilitated iterative design, and that participants using GUIComp had a better user experience and produced more acceptable designs than those who did not.

“We applied deep learning techniques to the design recommendation process and to eye-tracker calibration,” says Chunggi Lee (School of Electrical and Computer Engineering, UNIST), the first author of the study. “In particular, we used the K-nearest neighbor (KNN) algorithm and a Stacked Autoencoder (SAE) to provide real-time, multifaceted feedback on a user’s current design.”
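To illustrate the general idea behind K-nearest neighbor recommendation, the sketch below ranks a small set of example designs by their distance to the user's current design. It is a minimal, hypothetical sketch, not GUIComp's implementation: it assumes each design has already been reduced to a numeric feature vector (for example, an embedding such as an autoencoder might produce), and the function name `k_nearest_designs` and the sample data are invented for illustration.

```python
import math
from typing import Dict, List, Tuple

# Hypothetical feature vector for a GUI design (e.g., an assumed learned embedding).
Vector = List[float]


def k_nearest_designs(
    query: Vector, examples: Dict[str, Vector], k: int = 3
) -> List[Tuple[str, float]]:
    """Return the k example designs closest to `query` by Euclidean distance.

    Hypothetical helper for illustration; not part of GUIComp.
    """
    if k <= 0:
        raise ValueError("k must be positive")
    # Score every example design by its distance to the current design.
    scored = [(name, math.dist(query, vec)) for name, vec in examples.items()]
    # Sort by distance, breaking ties by name so the output is deterministic.
    scored.sort(key=lambda pair: (pair[1], pair[0]))
    return scored[:k]


# Usage: recommend the two stored designs most similar to the current one.
examples = {
    "login_a": [0.1, 0.9],
    "login_b": [0.2, 0.8],
    "gallery": [0.9, 0.1],
}
for name, distance in k_nearest_designs([0.12, 0.88], examples, k=2):
    print(f"{name}: {distance:.3f}")
```

A real recommender would use richer features and a larger design corpus; the distance-based ranking shown here is only the core of the KNN step.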

“We have put much effort into visualization to help resolve the issues that users with no experience face when designing user-friendly GUIs,” says Professor Ko. “With high-quality data, we expect this approach could also be applied in education, for example in teaching web development and painting.”

The findings of this study have been published in the proceedings of the ACM Conference on Human Factors in Computing Systems (CHI).