Machine learning is the process by which computers adapt their responses without human intervention. This form of artificial intelligence (AI) is now common in everyday tools such as virtual assistants and is being developed for applications ranging from medicine to agriculture. One challenge posed by the rapid expansion of machine learning is the high energy demand of the complex computing it requires. Researchers from The University of Tokyo have reported the first integration of a mobility-enhanced field-effect transistor (FET) with a ferroelectric capacitor (FE-cap), an approach that brings memory close to the microprocessor and improves the efficiency of data-intensive computing. Their findings were presented at the 2021 Symposium on VLSI Technology.

Memory cells require both a memory element and an access transistor. In currently available devices, the access transistors are generally silicon metal-oxide-semiconductor FETs (MOSFETs). While the memory elements can be formed in the ‘back end of line’ (BEOL) layers of the integrated circuit, these access transistors must be formed in the ‘front end of line’ (FEOL) layers, which is an inefficient use of that space.

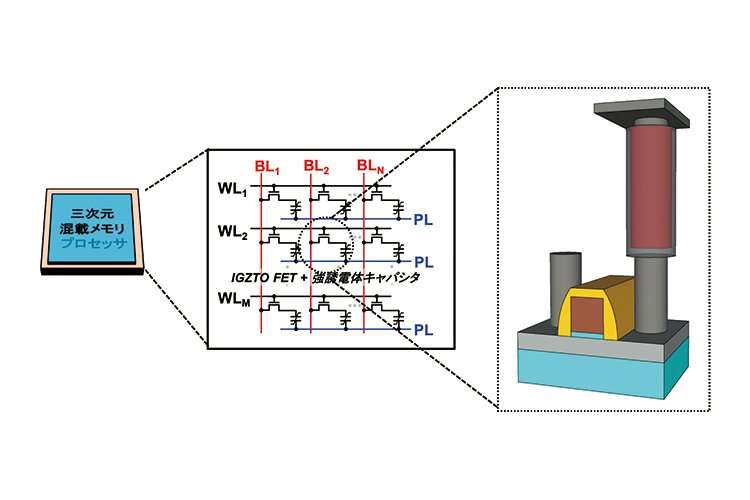

In contrast, oxide semiconductors such as indium gallium zinc oxide (IGZO) can be built into the BEOL layers because they can be processed at low temperatures. Monolithically integrating both the access transistor and the memory element in the BEOL makes it possible to achieve high-density, energy-efficient embedded memory directly on a microprocessor.

The researchers paired an oxide semiconductor FET with a channel of tin-doped IGZO (IGZTO) and an FE-cap to create 3-D embedded memory.

“In light of the high mobility and excellent reliability of our previously reported IGZO FET, we developed a tin-doped IGZTO FET,” explains study first author Jixuan Wu. “We then integrated the IGZTO FET with an FE-cap to introduce its scalable properties.”

Both the drive current and the effective mobility of the IGZTO FET were twice those of an IGZO FET without tin. Because the oxide semiconductor needs sufficiently high mobility for the FET to drive the FE-cap, adding tin enables the successful integration of the two devices.

“The proximity achieved with our design will significantly reduce the distance that signals must travel, which will speed up learning and inference processes in AI computing, making them more energy efficient,” study author Masaharu Kobayashi explains. “We believe our findings provide another step towards hardware systems that can support future AI applications of higher complexity.”