In the quest to train robots for real-world tasks, researchers have created “Holodeck,” an AI system capable of generating detailed, customizable 3D environments on demand, inspired by Star Trek’s holodeck technology. This system uses large language models to interpret user requests and generate a vast array of indoor scenarios, helping robots learn to navigate new spaces more effectively. (Artist’s concept.) Credit: SciTechDaily.com

Researchers at the University of Pennsylvania and the Allen Institute for Artificial Intelligence (AI2) have developed “Holodeck,” an advanced system capable of generating a wide range of virtual environments for training AI agents.

In Star Trek: The Next Generation, Captain Picard and the crew of the U.S.S. Enterprise utilize the holodeck, an empty room capable of generating three-dimensional environments, for mission preparation and entertainment. This technology simulates everything from lush jungles to Sherlock Holmes’ London. These deeply immersive and fully interactive environments are infinitely customizable; the crew simply requests a specific setting from the computer, and it materializes in the holodeck.

Today, virtual interactive environments are also used to train robots prior to real-world deployment in a process called “Sim2Real.” However, virtual interactive environments have been in surprisingly short supply. “Artists manually create these environments,” says Yue Yang, a doctoral student in the labs of Mark Yatskar and Chris Callison-Burch, Assistant and Associate Professors in Computer and Information Science (CIS), respectively. “Those artists could spend a week building a single environment,” Yang adds, noting all the decisions involved, from the layout of the space to the placement of objects to the colors employed in rendering.

Challenges in Creating Virtual Training Environments

That paucity of virtual environments is a problem if you want to train robots to navigate the real world with all its complexities. Neural networks, the systems powering today’s AI revolution, require massive amounts of data, which in this case means simulations of the physical world. “Generative AI systems like ChatGPT are trained on trillions of words, and image generators like Midjourney and DALL-E are trained on billions of images,” says Callison-Burch. “We only have a fraction of that amount of 3D environments for training so-called ‘embodied AI.’ If we want to use generative AI techniques to develop robots that can safely navigate in real-world environments, then we will need to create millions or billions of simulated environments.”

Using everyday language, users can prompt Holodeck to generate a virtually infinite variety of 3D spaces, which creates new possibilities for training robots to navigate the world. Credit: Yue Yang

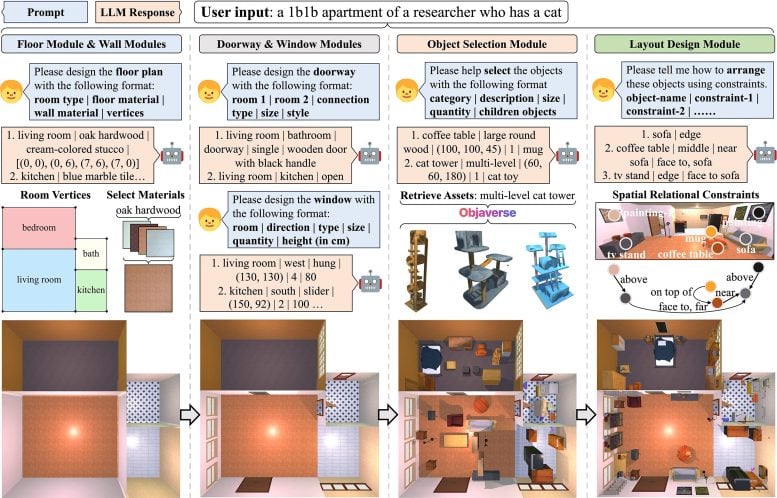

Enter Holodeck, a system for generating interactive 3D environments co-created by Callison-Burch, Yatskar, Yang and Lingjie Liu, Aravind K. Joshi Assistant Professor in CIS, along with collaborators at Stanford, the

Essentially, Holodeck engages a large language model (LLM) in a conversation, building a virtual environment piece by piece. Credit: Yue Yang

To evaluate Holodeck’s abilities, in terms of their realism and