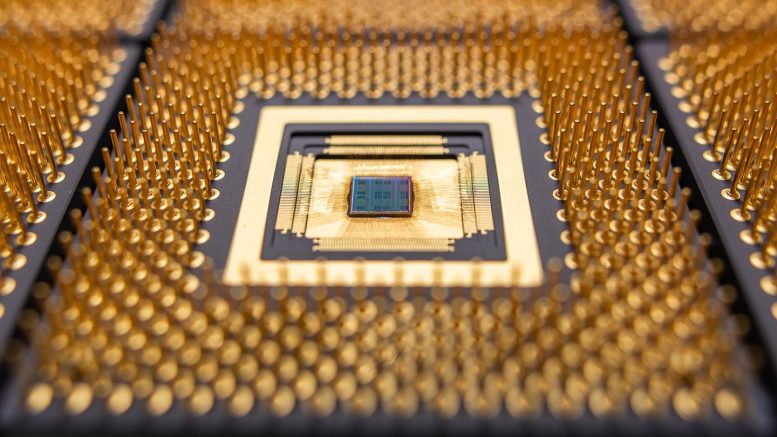

Princeton researchers have totally reimagined the physics of computing to build a chip for modern AI workloads, and with new U.S. government backing they will see how fast, compact and power-efficient this chip can get. An early prototype is pictured above. Credit: Hongyang Jia/Princeton University

Princeton’s advanced AI chip project, backed by

Revolutionizing AI Deployment

Chips that require less energy can be deployed to run AI in more dynamic environments, from laptops and phones to hospitals and highways to low-Earth orbit and beyond. The kinds of chips that power today’s most advanced models are too bulky and inefficient to run on small devices, and are primarily constrained to server racks and large data centers.

Now, the Defense Advanced Research Projects Agency, or DARPA, has announced an $18.6 million grant to support the work of Princeton professor Naveen Verma, which builds on a suite of key inventions from his lab. The DARPA funding will drive an exploration into how fast, compact and power-efficient the new chip can get.

“There’s a pretty important limitation with the best AI available just being in the data center,” Verma said. “You unlock it from that and the ways in which we can get value from AI, I think, explode.”

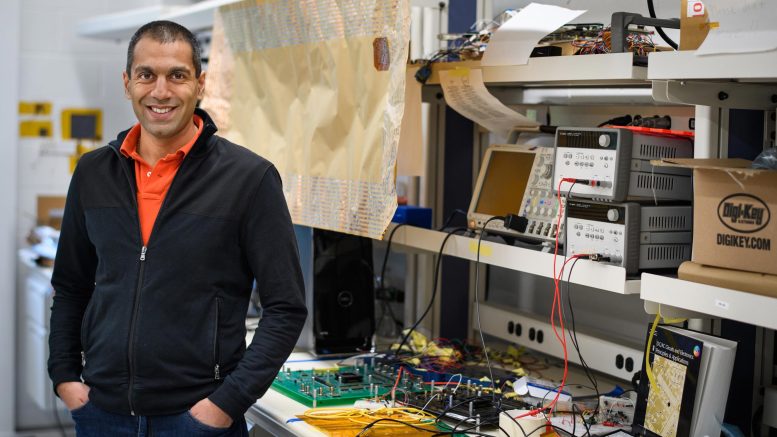

Professor Naveen Verma will lead a U.S.-backed project to supercharge AI hardware based on a suite of key inventions from his Princeton laboratory. Credit: Sameer A. Khan/Fotobuddy

The announcement came as part of a broader effort by DARPA to fund “revolutionary advances in science, devices, and systems” for the next generation of AI computing. The program, called OPTIMA, includes projects across multiple universities and companies. The program’s call for proposals estimated total funding at $78 million, although DARPA has not disclosed the full list of institutions or the total amount of funding the program has awarded to date.

The Emergence of EnCharge AI

In the Princeton-led project, researchers will collaborate with Verma’s startup, EnCharge AI. Based in Santa Clara, Calif., EnCharge AI is commercializing technologies based on discoveries from Verma’s lab, including several key papers he co-wrote with electrical engineering graduate students going back as far as 2016.

EnCharge AI “brings leadership in the development and execution of robust and scalable mixed-signal computing architectures,” according to the project proposal. Verma co-founded the company in 2022 with Kailash Gopalakrishnan, a former IBM Fellow, and Echere Iroaga, a leader in semiconductor systems design.

Gopalakrishnan said that innovation within existing computing architectures, along with improvements in silicon technology, began slowing at exactly the time when AI began creating massive new demands for computing power and efficiency. Not even the best graphics processing units (GPUs), which run today’s AI systems, can overcome the memory and computing-energy bottlenecks facing the industry.

“While GPUs are the best available tool today,” he said, “we concluded that a new type of chip will be needed to unlock the potential of AI.”

Transforming AI Computing Landscape

Between 2012 and 2022, the amount of computing power required by AI models grew by about 1 million percent, roughly a 10,000-fold increase, according to Verma, who is also director of the Keller Center for Innovation in Engineering Education at Princeton University.