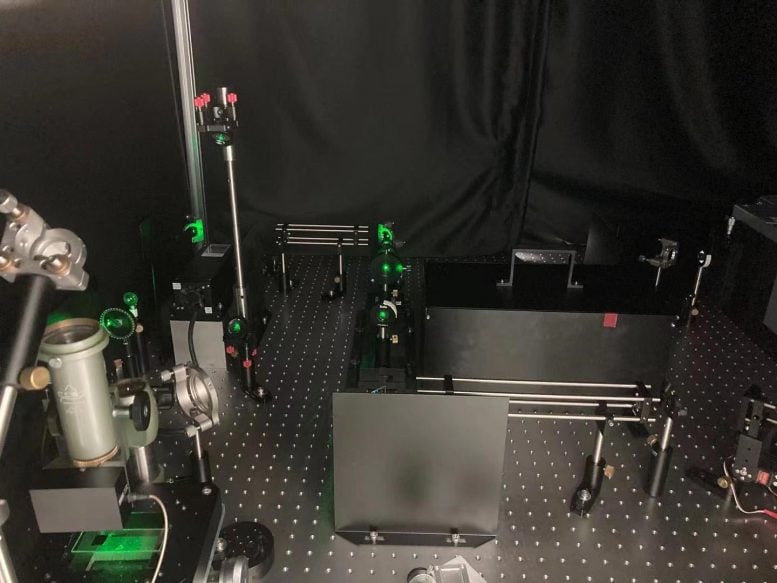

The new approach for digitizing color can be applied to cameras, displays and LED lighting. Because the color space studied isn’t device dependent, the same values should be perceived as the same color even if different devices are used. Pictured is a corner of the optical setup built by the researchers. Credit: Min Qiu’s PAINT research group, Westlake University

Method could help improve color for electronic displays and create more natural LED lighting.

If you’ve ever tried to capture a sunset with your smartphone, you know that the colors don’t always match what you see in real life. Researchers are coming closer to solving this problem with a new set of algorithms that make it possible to record and display color in digital images in a much more realistic fashion.

“When we see a beautiful scene, we want to record it and share it with others,” said Min Qiu, leader of the Laboratory of Photonics and Instrumentation for Nano Technology (PAINT) at Westlake University in China. “But we don’t want to see a digital photo or video with the wrong colors. Our new algorithms can help digital camera and electronic display developers better adapt their devices to our eyes.”

In Optica, The Optical Society’s (OSA) journal for high-impact research, Qiu and colleagues describe a new approach for digitizing color. It can be applied to cameras and displays, including those used for computers, televisions, and mobile devices, and used to fine-tune the color of LED lighting.

“Our new approach can improve today’s commercially available displays or enhance the sense of reality for new technologies such as near-eye-displays for virtual reality and augmented reality glasses,” said Jiyong Wang, a member of the PAINT research team. “It can also be used to produce LED lighting for hospitals, tunnels, submarines, and airplanes that precisely mimics natural sunlight. This can help regulate circadian rhythm in people who are lacking sun exposure, for example.”

Researchers developed algorithms that correlate digital signals with colors in a standard CIE color space. The video shows how various colors are created in the CIE 1931 chromaticity diagram by mixing three colors of light. Credit: Min Qiu’s PAINT research group, Westlake University

Mixing digital color

Digital colors such as the ones on a television or smartphone screen are typically created by combining red, green, and blue (RGB), with each color assigned a value. For example, an RGB value of (255, 0, 0) represents pure red. The RGB value reflects a relative mixing ratio of three primary lights produced by an electronic device. However, not all devices produce these primary lights in the same way, which means that identical RGB values can look like different colors on different devices.

Colors can also be defined in other color spaces, such as hue, saturation, value (HSV) or cyan, magenta, yellow, and black (CMYK). To make it possible to compare colors across different color spaces, the International Commission on Illumination (CIE) issued standards for defining colors visible to humans based on the optical responses of our eyes. Applying these standards requires scientists and engineers to convert digital, computer-based color spaces such as RGB to CIE-based color spaces when designing and calibrating their electronic devices.
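To make the conventional conversion step concrete, here is a minimal sketch of going from an 8-bit RGB triple to CIE 1931 chromaticity coordinates. It assumes the device follows the sRGB standard (D65 white point), which the article does not state; a real display would need its own measured primaries. The function names are hypothetical and chosen for illustration.

```python
# Sketch: 8-bit sRGB -> CIE XYZ -> CIE 1931 (x, y) chromaticity.
# Assumption: the device behaves like an ideal sRGB display.

# Standard linear-sRGB to XYZ matrix (D65), rounded to four decimals.
SRGB_TO_XYZ = (
    (0.4124, 0.3576, 0.1805),
    (0.2126, 0.7152, 0.0722),
    (0.0193, 0.1192, 0.9505),
)


def srgb_to_linear(c8):
    """Undo the sRGB transfer curve for one 8-bit channel (0..255)."""
    c = c8 / 255.0
    if c <= 0.04045:
        return c / 12.92
    return ((c + 0.055) / 1.055) ** 2.4


def srgb_to_xyz(r, g, b):
    """Convert an 8-bit sRGB triple to CIE XYZ tristimulus values."""
    lin = [srgb_to_linear(v) for v in (r, g, b)]
    return tuple(sum(m * v for m, v in zip(row, lin)) for row in SRGB_TO_XYZ)


def xyz_to_xy(X, Y, Z):
    """Project XYZ onto the chromaticity diagram, discarding brightness."""
    total = X + Y + Z
    if total == 0:
        raise ValueError("black has no defined chromaticity")
    return X / total, Y / total


x, y = xyz_to_xy(*srgb_to_xyz(255, 0, 0))
print(x, y)
```

Under these assumptions, pure red (255, 0, 0) should land close to the sRGB red primary at roughly (0.64, 0.33). The same triple sent to a device with different primaries would map to a different point, which is the device dependence described above.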

In the new work, the researchers developed algorithms that directly correlate digital signals with the colors in a standard CIE color space, making color space conversions unnecessary. Colors, as defined by the CIE standards, are created through additive color mixing. This process involves calculating the CIE values for the primary lights driven by digital signals and then mixing those together to create the color. To encode colors based on the CIE standards, the algorithms convert the digital pulsed signals for each primary color into unique coordinates for the CIE color space. To decode the colors, another algorithm extracts the digital signals from an expected color in the CIE color space.
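The encode and decode steps can be illustrated with textbook additive color mixing. The sketch below is not the researchers' algorithm, whose details are not given here; it assumes each primary contributes a luminance proportional to its drive level and uses the sRGB primaries as stand-in chromaticities. The names `mix`, `unmix`, and `PRIMARIES` are hypothetical.

```python
# Sketch: additive mixing of three primaries in CIE 1931 space.
# Assumption: linear colorimetry with illustrative (sRGB) primaries,
# not the method from the paper.

# (x, y) chromaticities of the red, green, and blue primaries.
PRIMARIES = ((0.64, 0.33), (0.30, 0.60), (0.15, 0.06))


def xyY_to_XYZ(x, y, Y):
    """Convert chromaticity plus luminance to XYZ tristimulus values."""
    return (x / y * Y, Y, (1.0 - x - y) / y * Y)


def mix(primaries, luminances):
    """Encode: sum the primaries' XYZ values, return the mixed (x, y, Y)."""
    X = Y = Z = 0.0
    for (px, py), lum in zip(primaries, luminances):
        Xi, Yi, Zi = xyY_to_XYZ(px, py, lum)
        X, Y, Z = X + Xi, Y + Yi, Z + Zi
    total = X + Y + Z
    return X / total, Y / total, Y


def det3(m):
    """Determinant of a 3x3 matrix given as nested sequences."""
    (a, b, c), (d, e, f), (g, h, i) = m
    return a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)


def unmix(primaries, x, y, Y):
    """Decode: solve for the primary luminances that yield (x, y, Y)."""
    target = xyY_to_XYZ(x, y, Y)
    cols = [xyY_to_XYZ(px, py, 1.0) for px, py in primaries]
    A = [[cols[j][i] for j in range(3)] for i in range(3)]
    d = det3(A)
    if abs(d) < 1e-12:
        raise ValueError("primaries are collinear; no unique solution")
    solution = []
    for k in range(3):  # Cramer's rule, one primary at a time
        Ak = [[target[i] if j == k else A[i][j] for j in range(3)]
              for i in range(3)]
        solution.append(det3(Ak) / d)
    return solution


x, y, Y = mix(PRIMARIES, [0.2126, 0.7152, 0.0722])
print(x, y, Y)
print(unmix(PRIMARIES, x, y, Y))
```

With these stand-in primaries, the luminance ratio used in the example mixes to approximately the D65 white point, and `unmix` recovers the original luminances. A negative value in the decoded result would indicate a target color outside the triangle spanned by the three primaries.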

“Our new method maps the digital signals directly to a CIE color space,” said Wang. “Because such color space isn’t device dependent, the same values should be perceived as the same color even if different devices are used. Our algorithms also allow other important properties of color such as brightness and chromaticity to be treated independently and precisely.”

Creating precise colors

The researchers tested their new algorithms with lighting, display, and sensing applications that involved LEDs and lasers. Their results agreed very well with their expectations and calculations. For example, they showed that chromaticity, which is a measure of colorfulness independent of brightness, could be controlled with a deviation of just ~0.0001 for LEDs and 0.001 for lasers. These values are so small that most people would not be able to perceive any differences in color.
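For a sense of scale, the sketch below computes a chromaticity deviation between a target color and a measured one. The article does not say how the deviation was defined, so this assumes a plain Euclidean distance in CIE 1931 (x, y) coordinates; the function name and the sample values are hypothetical.

```python
import math


def chromaticity_deviation(target, measured):
    """Euclidean distance between two (x, y) chromaticity points.

    Assumption: deviation is measured in CIE 1931 xy coordinates.
    """
    return math.hypot(measured[0] - target[0], measured[1] - target[1])


# Hypothetical example: a target near D65 white and a slightly shifted reading.
target = (0.3127, 0.3290)
measured = (0.3128, 0.3290)
print(chromaticity_deviation(target, measured))
```

In this made-up example the two points differ only in the fourth decimal place of x, which is the order of magnitude reported for the LED experiments.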

The researchers say that the method is ready to be applied to LED lights and commercially available displays. However, achieving the ultimate goal of reproducing exactly what we see with our eyes will require solving additional scientific and technical problems. For example, to record a scene as we see it, color sensors in a digital camera would need to respond to light in the same way as the photoreceptors in our eyes.

To build further on their work, the researchers are using state-of-the-art nanotechnologies to enhance the sensitivity of color sensors. This could be applied to artificial vision technologies to help people who have color blindness, for example.

Reference: “Nonlinear Color Space Coded by Additive Digital Pulses” by Ni Tang, Lei Zhang, Jianbin Zhou, Jiandong Yu, Boqu Chen, Yuxin Peng, Xiaoqing Tian, Wei Yan, Jiyong Wang and Min Qiu, 1 July 2021, Optica. DOI: