In two recently published research papers, computer scientists from the University of Luxembourg and international partners show how mouse movements can be used to learn more about user behavior. While this has many useful applications, mouse movements can also reveal sensitive information about users, such as their age or gender. The scientists want to raise awareness of these potential privacy issues and have proposed measures to mitigate them.

Prof. Luis Leiva from the University of Luxembourg, corresponding author of the two papers, explains the key findings in more detail.

My mouse, my rules

“We have demonstrated how straightforward it is to capture behavioral data about users at scale by unobtrusively tracking their mouse cursor movements, and to predict users’ demographic information with reasonable accuracy using five lines of code. For years, recording mouse movements on websites has been easy; however, analyzing them required advanced expertise in computer science and machine learning. Today, there are many libraries and frameworks that allow anyone with a minimum of programming knowledge to create rather sophisticated classifiers. This raises new privacy issues, and users do not have an easy opt-out mechanism.”

Based on their results, the team developed a method to prevent mouse tracking by distorting the mouse coordinates in real time. “It is inspired by recent research in adversarial machine learning, and has been implemented as a web browser extension, so that anyone can benefit from this work in practice,” explains Leiva. The web browser extension, called MouseFaker, is available on GitHub.

This work has been presented at the 6th ACM SIGIR Conference on Human Information Interaction and Retrieval.
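
The general idea of distorting cursor coordinates before a tracker can read them can be illustrated with a minimal Python sketch. It is not the MouseFaker implementation: the function name `distort`, the `max_offset` parameter, and the use of uniform random noise are assumptions made purely for illustration, whereas the published method draws on adversarial machine learning.

```python
import random
from typing import Iterable, Iterator, Optional, Tuple

# A cursor sample: (timestamp in seconds, x in pixels, y in pixels).
Point = Tuple[float, float, float]


def distort(
    points: Iterable[Point],
    max_offset: float = 5.0,
    seed: Optional[int] = None,
) -> Iterator[Point]:
    """Yield each sample with x and y shifted by random noise.

    Hypothetical illustration only: plain uniform jitter of at most
    `max_offset` pixels per axis. Timestamps are left unchanged.
    """
    rng = random.Random(seed)
    for t, x, y in points:
        dx = rng.uniform(-max_offset, max_offset)
        dy = rng.uniform(-max_offset, max_offset)
        yield (t, x + dx, y + dy)


if __name__ == "__main__":
    raw = [(0.0, 100.0, 100.0), (0.1, 110.0, 105.0), (0.2, 125.0, 112.0)]
    for sample in distort(raw, max_offset=5.0, seed=42):
        print(sample)
```

Because the samples are processed one at a time, a filter of this kind could in principle run while the user is moving the mouse, which is what "real time" requires.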

When choice happens

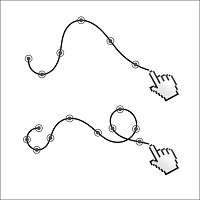

Nevertheless, mouse tracking has very practical applications for webmasters, and in particular for search engines. Dr. Ioannis Arapakis from Telefonica Research, co-author of both publications, clarifies: “When you search for something on Google or Bing, your mouse movements send a tiny signal to the search engine indicating whether or not you are interested in the content you have been shown. As mouse tracking may raise privacy issues, we investigated the possibility of recording only a small part of the whole movement trajectory to see whether we can still infer how people make choices in web search.”

The team analyzed three representative scenarios where users had to make a choice on web search engines: when they notice an advertisement, when they abandon the page, and when they become frustrated. The results are interesting: if users pay attention to an ad, this is signaled by the initial mouse movements. For page abandonment, the opposite holds: the final movements indicate that the user has decided to leave, whether satisfied with the search results or not, without having to click on anything. In the frustration case, results were mixed, but the middle part of a mouse movement trajectory seemed to provide more information than the initial or final parts.

The researchers found that these outcomes can be predicted, sometimes using just two or three seconds of mouse movement. They therefore conclude that, by tracking only the informative parts, search engines could get useful information and improve their services while respecting users’ privacy. This work will be presented at the 44th International ACM SIGIR Conference on Research and Development in Information Retrieval.

Prof. Leiva says, “By efficiently recording the right amount of movement data, we can save valuable bandwidth and storage, respect the user’s privacy, and increase the speed at which machine learning models can be trained and deployed. Considering the scale of the web, doing so will have a net benefit on our environment.”
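
Keeping only the initial, middle, or final seconds of a cursor trajectory can be sketched in a few lines of Python. This is an illustration under stated assumptions rather than the authors' code: the `segment` function, its parameter names, and the choice to center the "middle" window on the midpoint of the recording are all hypothetical.

```python
from typing import List, Tuple

# A cursor sample: (timestamp in seconds, x in pixels, y in pixels).
Point = Tuple[float, float, float]


def segment(points: List[Point], seconds: float, part: str = "initial") -> List[Point]:
    """Return only the samples inside a window of `seconds` length.

    `part` selects where the window sits: "initial" (start of the
    trajectory), "final" (end), or "middle" (centered on the midpoint
    in time). Assumes `points` is sorted by timestamp.
    """
    if not points:
        return []
    start, end = points[0][0], points[-1][0]
    if part == "initial":
        lo, hi = start, start + seconds
    elif part == "final":
        lo, hi = end - seconds, end
    elif part == "middle":
        mid = (start + end) / 2
        lo, hi = mid - seconds / 2, mid + seconds / 2
    else:
        raise ValueError(f"unknown part: {part!r}")
    return [p for p in points if lo <= p[0] <= hi]


if __name__ == "__main__":
    # Ten seconds of synthetic movement, one sample per second.
    trajectory = [(float(t), t * 10.0, 0.0) for t in range(11)]
    print(len(segment(trajectory, 2.0, "initial")))
    print(len(segment(trajectory, 2.0, "final")))
```

Only the selected slice would then need to be transmitted and stored, which is the source of the bandwidth and privacy savings described above.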

When Choice Happens: A Systematic Examination of Mouse Movement Length for Decision Making in Web Search, Proceedings of the International ACM SIGIR Conference on Research and Development in Information Retrieval (SIGIR), DOI: 10.1145/3404835.3463055