If you’re a fan of spy movies, you’ve probably seen scenes in which intelligence agents identify a perpetrator by running surveillance-camera footage through some sophisticated image-enhancement technology. Real-life surveillance has the same goal of detecting and identifying objects, but unlike in the movies, there is often a trade-off between a camera’s field of view and its resolution.

Surveillance cameras typically need a wide field of view so that threats are more likely to be detected. Omnidirectional cameras, which capture a full 360-degree range, have therefore become a popular choice: they leave no blind spots and are cheap to install. However, recent studies on object recognition with omnidirectional cameras show that distant objects appear at low resolution in their images, which makes them hard to identify. Increasing the resolution is the obvious fix, but according to one study the minimum required resolution is 4K (3840 × 2160 pixels), which translates into enormous bitrate requirements and calls for efficient image compression.
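To put the bitrate problem in perspective, here is a back-of-the-envelope calculation for uncompressed 4K video. The 24 bits per pixel (8-bit RGB) and 30 frames per second are assumptions chosen for illustration; the capture settings used in the study are not given here.

```python
def raw_bitrate_bps(width: int, height: int, bits_per_pixel: int, fps: int) -> int:
    """Bitrate of uncompressed video in bits per second."""
    return width * height * bits_per_pixel * fps


# Assumed settings: 8-bit RGB (24 bits per pixel) at 30 frames per second.
bps = raw_bitrate_bps(3840, 2160, 24, 30)
print(f"{bps / 1e9:.2f} Gbit/s")  # prints "5.97 Gbit/s"
```

That is roughly 6 Gbit/s for a single uncompressed stream under these assumptions, which is why compression becomes unavoidable at 4K.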

Moreover, 3D omnidirectional images often cannot be processed in raw form because of lens distortion and must first be projected onto a 2D plane. “Continuous processing under high computational loads incurred by tasks such as moving object detection combined with converting a 360-degree video at 4K or higher resolutions into 2D images is simply infeasible in terms of real-life performance and installation costs,” says Dr. Chinthaka Premachandra from Shibaura Institute of Technology (SIT), Japan, who researches image processing.
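The article does not specify which lens model or 2D projection the researchers used. As a minimal sketch, assuming an ideal equidistant fisheye lens (image radius r = f·θ, where θ is the angle from the optical axis) and an equirectangular panorama as the 2D target, a fisheye pixel can be mapped to a viewing direction and then projected onto the panorama. The function names, the axis convention (x right, y down, z forward) and the `rear` flag for the back lens are hypothetical; real lenses would need calibrated distortion coefficients.

```python
import math


def fisheye_pixel_to_direction(u, v, cx, cy, f, rear=False):
    """Map fisheye pixel (u, v) to a unit 3D direction.

    Assumes an ideal equidistant lens (r = f * theta) centred at (cx, cy),
    with axes x right, y down, z along the front lens's optical axis.
    """
    dx, dy = u - cx, v - cy
    theta = math.hypot(dx, dy) / f   # angle from the optical axis
    phi = math.atan2(dy, dx)         # azimuth around the optical axis
    x = math.sin(theta) * math.cos(phi)
    y = math.sin(theta) * math.sin(phi)
    z = math.cos(theta)
    if rear:
        # The rear lens faces the opposite way: rotate 180 degrees about the y-axis.
        x, z = -x, -z
    return x, y, z


def direction_to_equirect(d, width, height):
    """Project a unit direction onto a width x height equirectangular image."""
    x, y, z = d
    lon = math.atan2(x, z)                   # -pi..pi, 0 straight ahead
    lat = math.asin(max(-1.0, min(1.0, y)))  # -pi/2 (up) .. pi/2 (down)
    px = (lon / (2.0 * math.pi) + 0.5) * width
    py = (lat / math.pi + 0.5) * height
    return px, py


# Hypothetical 1920 x 1920 fisheye frame whose 180-degree image circle has radius 960 px.
f = 960 / (math.pi / 2)
print(direction_to_equirect(fisheye_pixel_to_direction(960, 960, 960, 960, f), 3840, 1920))
# (1920.0, 960.0): the lens centre lands in the middle of the panorama
```

Once both lenses are mapped this way, the resulting 2D panorama is what a detector would scan for regions of interest.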

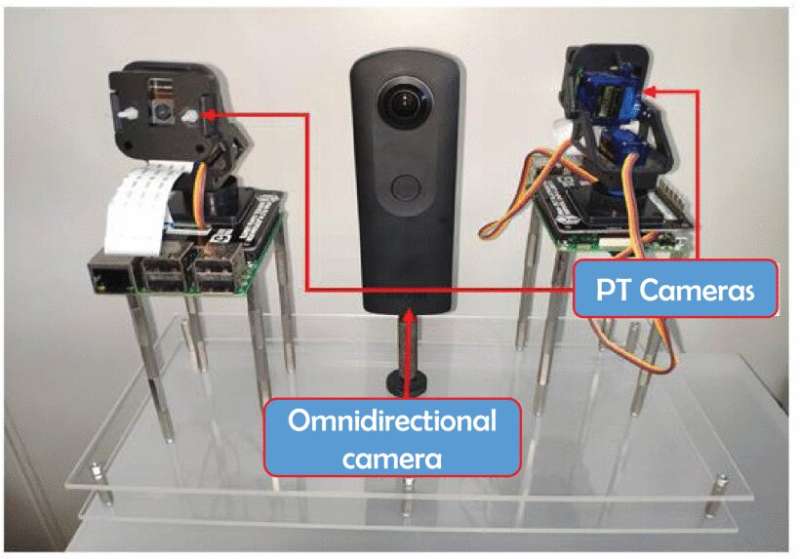

Addressing this issue in his latest study, published in IEEE Sensors Journal, Dr. Premachandra and his colleague Masaya Tamaki from SIT considered a system in which an omnidirectional camera locates a region of interest while a separate camera captures a high-resolution image of it, allowing highly accurate object identification without large computational costs. Accordingly, they built a hybrid camera platform consisting of an omnidirectional camera flanked on either side by a pan-tilt (PT) camera with a 180-degree field of view. The omnidirectional camera itself comprised two fisheye lenses sandwiching the camera body, each lens covering a 180-degree capture range.

The researchers used Raspberry Pi Camera Module v2.1 units as the PT cameras, fitted each with a pan-tilt module, and connected them to a Raspberry Pi 3 Model B. Finally, they connected the whole system (the omnidirectional camera, the PT cameras, and the Raspberry Pi) to a personal computer for overall control.
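The article does not say how the Raspberry Pi drives the pan-tilt modules. Many hobby pan-tilt kits use two small servos controlled by 50 Hz PWM, where the pulse width encodes the angle. Assuming such servos with a pulse range of 500 to 2500 µs over 0 to 180 degrees (the real range should be taken from the hardware's datasheet), pan and tilt angles could be turned into PWM duty cycles as sketched below; all names and constants are hypothetical.

```python
SERVO_PERIOD_US = 20_000  # 50 Hz PWM frame, typical for hobby servos (assumed)
MIN_PULSE_US = 500        # pulse width at 0 degrees (assumed)
MAX_PULSE_US = 2500       # pulse width at 180 degrees (assumed)


def angle_to_pulse_us(angle_deg):
    """Map a servo angle in [0, 180] degrees to a pulse width in microseconds."""
    angle = max(0.0, min(180.0, angle_deg))  # clamp to the mechanical range
    return MIN_PULSE_US + (MAX_PULSE_US - MIN_PULSE_US) * angle / 180.0


def angle_to_duty_percent(angle_deg):
    """Duty cycle, in percent of the PWM period, for the given servo angle."""
    return 100.0 * angle_to_pulse_us(angle_deg) / SERVO_PERIOD_US


def pan_tilt_to_duty(pan_deg, tilt_deg):
    """Convert pan/tilt angles centred on 0 (range -90..90) to servo duty cycles."""
    return angle_to_duty_percent(pan_deg + 90.0), angle_to_duty_percent(tilt_deg + 90.0)


print(pan_tilt_to_duty(0.0, 0.0))  # (7.5, 7.5): both servos at their centre position
```

On a Raspberry Pi, duty-cycle values like these could be passed to a PWM output, for example through RPi.GPIO's `PWM.ChangeDutyCycle()`; hardware PWM or a dedicated servo driver board usually gives less jitter than software PWM.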

The operational flow was as follows. The researchers first processed an omnidirectional image to extract a target region. The region’s coordinates were then converted into pan and tilt angles and sent to the Raspberry Pi, which controlled each PT camera accordingly and determined whether a complementary image should be taken.
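The article does not detail the coordinate-to-angle conversion. A minimal sketch, assuming the omnidirectional image has been stitched into an equirectangular panorama (each column corresponds to an azimuth, each row to an elevation), takes the centre of the extracted target region and converts it into pan and tilt angles for whichever PT camera covers that direction. The left/right split of the 360-degree range, the angle conventions and all function names are hypothetical, and the decision of whether to take a complementary image is not modelled.

```python
def region_centre(box):
    """Centre (px, py) of a bounding box given as (x_min, y_min, x_max, y_max)."""
    x0, y0, x1, y1 = box
    return (x0 + x1) / 2.0, (y0 + y1) / 2.0


def equirect_to_pan_tilt(px, py, width, height):
    """Convert a panorama pixel to (camera, pan_deg, tilt_deg).

    Hypothetical conventions: column 0 is azimuth -180 deg and the centre column
    is 0 deg; row 0 is +90 deg elevation. The "left" PT camera covers azimuths
    [-180, 0) with its zero-pan axis at -90 deg; the "right" one covers [0, 180]
    with its zero-pan axis at +90 deg.
    """
    azimuth = (px / width - 0.5) * 360.0     # -180..180 degrees
    elevation = (0.5 - py / height) * 180.0  # +90 at the top row
    if azimuth < 0.0:
        camera, axis = "left", -90.0
    else:
        camera, axis = "right", 90.0
    pan = azimuth - axis  # -90..90 relative to the chosen camera
    return camera, pan, elevation


# A target detected in a hypothetical 3840 x 1920 panorama.
print(equirect_to_pan_tilt(*region_centre((2860, 460, 2900, 500)), 3840, 1920))
# ('right', 0.0, 45.0)
```

Splitting the panorama by azimuth keeps each PT camera within its own 180-degree range, so only one camera has to move for any given target.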

The researchers performed four main types of experiments, each demonstrating a different aspect of the camera platform’s performance, along with separate experiments verifying image capture for targets at different locations.

The researchers acknowledge a potential issue with moving objects: because of the time delay in image acquisition, the complementary images could be shifted relative to the target. As a countermeasure, they propose using a Kalman filter to predict the object’s future coordinates at the moment of capture.
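The article does not describe the proposed filter in detail. A common choice for this kind of short-term prediction is a constant-velocity Kalman filter, sketched below under that assumption. It tracks one coordinate (for example, the pan angle), so a second instance would handle the other axis; the noise values `q` and `r` and the class name are hypothetical and would need tuning to the real system.

```python
class ConstantVelocityKalman1D:
    """Kalman filter for one coordinate with state [position, velocity].

    Assumes constant velocity plus random acceleration (process noise q)
    and position measurements with noise variance r.
    """

    def __init__(self, x0, q=1.0, r=0.01):
        self.x = [x0, 0.0]                 # state estimate: position, velocity
        self.P = [[1.0, 0.0], [0.0, 1.0]]  # state covariance
        self.q = q
        self.r = r

    def predict(self, dt):
        """Propagate by dt seconds: x <- F x, P <- F P F^T + Q, F = [[1, dt], [0, 1]]."""
        p, v = self.x
        self.x = [p + v * dt, v]
        (a, b), (c, d) = self.P
        q = self.q
        self.P = [
            [a + dt * (b + c) + dt * dt * d + q * dt**4 / 4, b + dt * d + q * dt**3 / 2],
            [c + dt * d + q * dt**3 / 2, d + q * dt**2],
        ]

    def update(self, z):
        """Fold in a measured position z (measurement matrix H = [1, 0])."""
        (a, b), (c, d) = self.P
        s = a + self.r         # innovation variance
        k0, k1 = a / s, c / s  # Kalman gain
        y = z - self.x[0]      # innovation: measurement minus predicted position
        self.x = [self.x[0] + k0 * y, self.x[1] + k1 * y]
        self.P = [[(1 - k0) * a, (1 - k0) * b],
                  [c - k1 * a, d - k1 * b]]

    def predict_ahead(self, latency):
        """Extrapolated position `latency` seconds from now (state unchanged)."""
        return self.x[0] + self.x[1] * latency


# Hypothetical target panning at 2 degrees per second, measured every 0.1 s.
kf = ConstantVelocityKalman1D(x0=0.0)
dt = 0.1
for k in range(1, 201):
    kf.predict(dt)
    kf.update(2.0 * k * dt)
# Aim the PT camera where the target should be after an assumed 0.2 s capture delay.
print(round(kf.predict_ahead(0.2), 2))
```

Calling `predict_ahead` with the expected acquisition delay, rather than using the last measured position, is what compensates for the shift the researchers describe.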

“We expect that our camera system will create positive impacts on future applications employing omnidirectional imaging such as robotics, security systems, and monitoring systems,” says Dr. Premachandra.