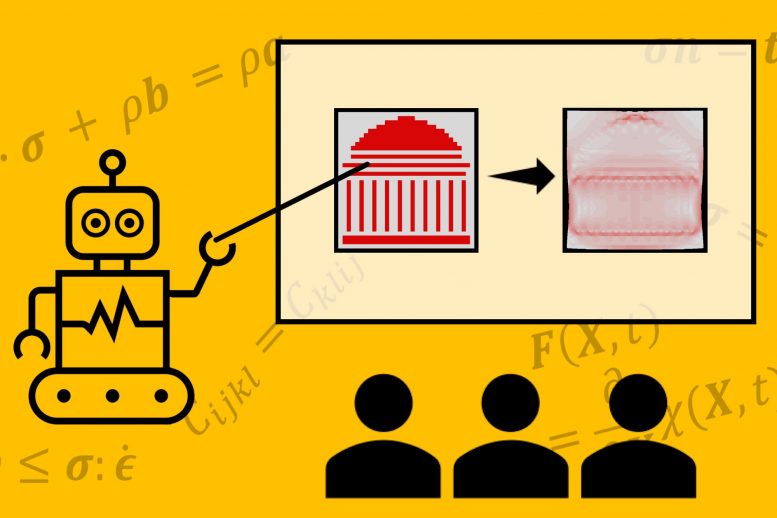

MIT researchers have developed a machine-learning technique that uses an image of the material’s internal structure to estimate the stresses and strains acting on the material. Credit: Courtesy of the researchers

The advance could accelerate engineers’ design process by eliminating the need to solve complex equations.

Isaac Newton may have met his match.

For centuries, engineers have relied on physical laws — developed by Newton and others — to understand the stresses and strains on the materials they work with. But solving those equations can be a computational slog, especially for complex materials.

MIT researchers have developed a technique to quickly determine certain properties of a material, like stress and strain, based on an image of the material showing its internal structure. The approach could one day eliminate the need for arduous physics-based calculations, instead relying on computer vision and machine learning to generate estimates in real time.

The researchers say the advance could enable faster design prototyping and material inspections. “It’s a brand new approach,” says Zhenze Yang, adding that the algorithm “completes the whole process without any domain knowledge of physics.”

The research appears today in the journal Science Advances. Yang is the paper’s lead author and a PhD student in the Department of Materials Science and Engineering. Co-authors include former MIT postdoc Chi-Hua Yu and Markus Buehler, the McAfee Professor of Engineering and the director of the Laboratory for Atomistic and Molecular Mechanics.

Engineers spend lots of time solving equations. They help reveal a material’s internal forces, like stress and strain, which can cause that material to deform or break. Such calculations might suggest how a proposed bridge would hold up amid heavy traffic loads or high winds. Unlike Sir Isaac, engineers today don’t need pen and paper for the task. “Many generations of mathematicians and engineers have written down these equations and then figured out how to solve them on computers,” says Buehler. “But it’s still a tough problem. It’s very expensive — it can take days, weeks, or even months to run some simulations. So, we thought: Let’s teach an AI to do this problem for you.”

The researchers turned to a machine-learning technique called a generative adversarial network (GAN). They trained the network with thousands of paired images: one depicting a material's internal microstructure subject to mechanical forces, and the other depicting that same material's color-coded stress and strain values. A GAN sets two networks against each other in a game-theoretic contest. A generator produces candidate stress maps, and a discriminator tries to tell them apart from the real ones. Through this back-and-forth, the system iteratively learns the relationships between the geometry of a material and its resulting stresses.
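The article does not spell out the network's training objective. A paired image-to-image setup like the one described is commonly trained as a conditional GAN in the style of pix2pix. Assuming that setup, the two players' losses could be sketched as below. The function names, the scalar discriminator outputs, and the weight of 100 on the pixel-wise L1 term are illustrative assumptions, not details reported in the paper.

```python
import math
from typing import Sequence


def bce(prob: float, target: float, eps: float = 1e-7) -> float:
    """Binary cross-entropy for a single discriminator probability."""
    p = min(max(prob, eps), 1.0 - eps)  # clamp to avoid log(0)
    return -(target * math.log(p) + (1.0 - target) * math.log(1.0 - p))


def l1(a: Sequence[float], b: Sequence[float]) -> float:
    """Mean absolute error between two flattened images."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def discriminator_loss(d_real: float, d_fake: float) -> float:
    # The discriminator wants D(microstructure, true field) -> 1
    # and D(microstructure, generated field) -> 0.
    return bce(d_real, 1.0) + bce(d_fake, 0.0)


def generator_loss(d_fake: float,
                   fake_field: Sequence[float],
                   true_field: Sequence[float],
                   lam: float = 100.0) -> float:
    # The generator wants to fool the discriminator (D -> 1) while staying
    # close, pixel by pixel, to the true stress field (L1 term weighted by lam).
    # lam = 100 is an assumed value borrowed from common pix2pix practice.
    return bce(d_fake, 1.0) + lam * l1(fake_field, true_field)


if __name__ == "__main__":
    # Hypothetical 2x2 stress maps, flattened to lists.
    true_field = [0.1, 0.8, 0.3, 0.5]
    fake_field = [0.2, 0.7, 0.3, 0.4]
    print(discriminator_loss(d_real=0.9, d_fake=0.2))
    print(generator_loss(d_fake=0.2, fake_field=fake_field, true_field=true_field))
```

The two losses pull in opposite directions on `d_fake`. As the generator's stress maps become harder to distinguish from real ones, its loss falls and the discriminator's rises, which is the adversarial "game" the paragraph above refers to.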

“So, from a picture, the computer is able to predict all those forces: the deformations, the stresses, and so forth,” Buehler says. “That’s really the breakthrough — in the conventional way, you would need to code the equations and ask the computer to solve partial differential equations. We just go picture to picture.”

This visualization shows the deep-learning approach predicting physical fields for different input geometries. The left figure shows a composite with varying geometry in which the soft material is elongating, and the right figure shows the predicted mechanical field corresponding to that geometry. Credit: MIT

That image-based approach is especially advantageous for complex, composite materials. Forces on a material may operate differently at the atomic scale than at the macroscopic scale. “If you look at an airplane, you might have glue, a metal, and a polymer in between. So, you have all these different faces and different scales that determine the solution,” says Buehler. “If you go the hard way — the Newton way — you have to walk a huge detour to get to the answer.”

But the researchers' network is adept at dealing with multiple scales. It processes information through a series of “convolutions,” which analyze the images at progressively larger scales. “That’s why these neural networks are a great fit for describing material properties,” says Buehler.
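To see why stacked convolutions suit multiscale problems, it helps to compute each layer's receptive field: the patch of input pixels that a single output value depends on. The sketch below uses the standard recurrence for kernel size and stride. The five-layer configuration of 4×4 kernels with stride 2 in the usage example is a hypothetical choice for illustration, not the architecture reported in the paper.

```python
from typing import List, Tuple


def receptive_fields(layers: List[Tuple[int, int]]) -> List[int]:
    """Return the receptive field (in input pixels) after each conv layer.

    Each layer is given as (kernel_size, stride). The recurrence is
        r_out = r_in + (kernel_size - 1) * j_in
        j_out = j_in * stride
    where j is the spacing between adjacent outputs, measured in input pixels.
    """
    r, j = 1, 1  # a raw input pixel "sees" only itself
    fields = []
    for kernel_size, stride in layers:
        r = r + (kernel_size - 1) * j
        j = j * stride
        fields.append(r)
    return fields


if __name__ == "__main__":
    # Hypothetical encoder: five 4x4 convolutions, each with stride 2.
    encoder = [(4, 2)] * 5
    for depth, field in enumerate(receptive_fields(encoder), start=1):
        print(f"layer {depth}: each output sees a {field}x{field} pixel patch")
```

Early layers respond to fine local detail, such as the boundary between a soft and a brittle phase. Deeper layers integrate information over much larger regions of the microstructure, so a single network can account for behavior at several length scales at once.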

The fully trained network performed well in tests, successfully rendering stress and strain values given a series of close-up images of the microstructure of various soft composite materials. The network was even able to capture “singularities,” like cracks developing in a material. In these instances, forces and fields change rapidly across tiny distances. “As a material scientist, you would want to know if the model can recreate those singularities,” says Buehler. “And the answer is yes.”

This visualization shows failure in a complex material simulated by a machine-learning approach, without solving the governing equations of mechanics. Red represents a soft material, white represents a brittle material, and green represents a crack. Credit: MIT

The advance could “significantly reduce the iterations needed to design products,” according to Suvranu De, a mechanical engineer at Rensselaer Polytechnic Institute who was not involved in the research. “The end-to-end approach proposed in this paper will have a significant impact on a variety of engineering applications — from composites used in the automotive and aircraft industries to natural and engineered biomaterials. It will also have significant applications in the realm of pure scientific inquiry, as force plays a critical role in a surprisingly wide range of applications from micro/nanoelectronics to the migration and differentiation of cells.”

In addition to saving engineers time and money, the new technique could give nonexperts access to state-of-the-art materials calculations. Architects or product designers, for example, could test the viability of their ideas before passing the project along to an engineering team. “They can just draw their proposal and find out,” says Buehler. “That’s a big deal.”

Once trained, the network runs almost instantaneously on consumer-grade computer processors. That could enable mechanics and inspectors to diagnose potential problems with machinery simply by taking a picture.

In the new paper, the researchers worked primarily with composite materials that included both soft and brittle components in a variety of random geometrical arrangements. In future work, the team plans to use a wider range of material types. “I really think this method is going to have a huge impact,” says Buehler. “Empowering engineers with AI is really what we’re trying to do here.”

Reference: “Deep learning model to predict complex stress and strain fields in hierarchical composites” by Zhenze Yang, Chi-Hua Yu and Markus J. Buehler, 9 April 2021, Science Advances. DOI: 10.1126/sciadv.abd7416

Funding for this research was provided, in part, by the Army Research Office and the Office of Naval Research.